If Account-Level Quality Score Doesn’t Exist, Can It Really Hurt Your AdWords Program?

Google has continually denied that an account-level Quality Score exists, yet this unseen metric still seems to have an impact on AdWords performance.

While most search marketers will agree that AdWords Quality Score (QS) primarily reflects how a keyword’s click-through rate (CTR) is expected to compare to its competition under the same circumstances, Google has left enough ambiguity in the finer points of Quality Score that it continues to be a rich area for research and debate.

One of those finer points has long been the notion of an account-level Quality Score and how much impact it has on individual keywords, particularly low-traffic ones.

Last year, former Googler and Search Engine Land columnist Fred Vallaeys, as part of a larger, more nuanced piece, offered that “technically, Google does not have a metric called account-level QS.” In its recent white paper, “Settling the (Quality) Score,” Google bluntly confirmed this, saying, “There is no such thing as ad group-level, campaign-level or account-level Quality Score.”

Okay, case closed, right? Well, not quite.

There are still plenty of folks out there who will tell you that account-level Quality Score, or at least something that looks a lot like it, does exist and can have a major impact on the performance of your program. WordStream’s Larry Kim has been treading in these waters quite a bit recently, so I recommend reading a couple of his latest posts for that perspective.

Though Google denies that an account-level Quality Score exists, the distinction could just be semantics, and its white paper leaves some wiggle room on this issue by revealing that, at least for “newly-launched keywords,” the performance of “related keywords” does matter:

Instead of measuring new keywords from scratch, we start with info about related ads and landing pages you already have. If your related keywords, ads and landing pages are in good shape, we’ll probably continue to have a high opinion of them.

This makes sense. If Google needs help predicting the CTR of any keywords, it will be those with little to no history. It would follow that the fewer impressions any new keyword has, the more reliant Google would be on using data from related terms.

So what do the data for newly-launched keywords tell us?

Comparing The Quality Score Of New Keywords To The Entire Account

While an account-level Quality Score number may not exist as an official entity in AdWords, we can easily compute an impression-weighted average of the Quality Scores of all the keywords within an account to serve as a benchmark for one.
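As a minimal sketch of that benchmark, assuming each keyword has been exported as an (impressions, Quality Score) pair for the period, the weighting looks like this. The function name and input format are hypothetical, not part of any AdWords API:

```python
def weighted_quality_score(keywords):
    """Impression-weighted average Quality Score.

    keywords: iterable of (impressions, quality_score) pairs, e.g. rows
    exported from a keyword report (hypothetical format).
    """
    total_impressions = 0
    weighted_sum = 0
    for impressions, qs in keywords:
        total_impressions += impressions
        weighted_sum += impressions * qs
    if total_impressions == 0:
        raise ValueError("no impressions to weight by")
    return weighted_sum / total_impressions


# A high-traffic keyword pulls the benchmark toward its own score.
print(weighted_quality_score([(9000, 8), (1000, 3)]))  # 7.5
```

Weighting by impressions means the benchmark reflects the keywords that actually serve most often, rather than giving a rarely shown keyword the same say as a top performer.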

Looking at data from July 2014, I did just that for about 40 fairly large accounts and found that the average “account-wide” Quality Score was a little under 7 (6.7, to be exact). I also pulled the initial Quality Score (after one day of being active) given to any new keywords added during the same month for the same programs. From there, we can start to look at some comparisons.

If Google is giving account-wide Quality Score performance a lot of weight in the Quality Score they assign to new terms, particularly those with low traffic, we’d expect to see a pretty strong correlation between the scores of the account and the new keywords on average.

Running these numbers for the programs I looked at, and limiting the comparison to new keywords with fewer than 10 impressions a day, shows a clear connection, but also a lot of noise around the linear trend line. The R-squared value is 0.36.
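For a simple least-squares trend line, R-squared is the squared Pearson correlation between the two series. Here is a sketch, assuming one point per account, where x is the account-wide Quality Score and y is the average initial Quality Score of that account’s new keywords. The names and toy data are illustrative only, not the study’s:

```python
def r_squared(xs, ys):
    """R-squared of an ordinary least-squares line y = a + b*x.

    For a one-variable linear fit this equals the squared Pearson correlation.
    """
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length series with at least 2 points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    s_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    s_xx = sum((x - mean_x) ** 2 for x in xs)
    s_yy = sum((y - mean_y) ** 2 for y in ys)
    if s_xx == 0 or s_yy == 0:
        raise ValueError("a constant series has no defined correlation")
    return s_xy * s_xy / (s_xx * s_yy)


# Toy data: account-wide QS vs. mean initial QS of new keywords, one pair per account.
print(r_squared([5, 6, 7, 8], [5, 7, 6, 8]))  # 0.64
```

Read this way, an R-squared of 0.36 means the straight-line fit accounts for roughly a third of the variation in new-keyword scores across accounts, leaving most of it unexplained.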

Limiting To Non-Brand Keywords Only

We know that the keywords a site runs on its own brand name almost always have a much higher CTR than the competition and tend to receive a high Quality Score as a result (usually a 10 in the score Google passes to advertisers). Brand keywords also have much higher CTRs than the non-brand keywords in the same account.

Surely Google must at least be able to distinguish brand from non-brand, perhaps not by analyzing the keyword text itself, but because the performance differences should stand out dramatically.

Again, even if Google were using the fairly blunt mechanism of an account-wide Quality Score in a heavy-handed way, it should still be able to keep brand keywords from influencing the Quality Scores of new non-brand keywords.

If it were doing so, we’d expect to see a better correlation between new non-brand keyword QS and account-wide non-brand QS. In fact we do: the R-squared rises a bit, to 0.44.

Since the correlation was worse when we included brand keywords, it stands to reason that advertisers shouldn’t count on their brand terms to lift up the QS of their non-brand terms. In fact, sites where brand has the greatest impact on account-wide Quality Score often have lower non-brand Quality Scores; the logic is that if their non-brand QS were higher, they’d be getting more impressions from non-brand terms.

Again, we are looking at new keywords with fewer than 10 impressions per day here, on the assumption that Google would need the most help predicting CTR for exactly such terms. But if we run the same comparison on new keywords that received more than 10 impressions per day, we actually see a better correlation, with an R-squared of 0.55.
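The brand and traffic splits in this section amount to filtering keywords before taking the two averages. Below is a sketch of that per-account step. The `Kw` record, the use of a simple unweighted mean for the new keywords, and treating exactly 10 impressions a day as high traffic are all assumptions, not details from the study:

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Kw:
    qs: int                                 # Quality Score (initial score for new keywords)
    impressions: int                        # impressions during the month
    brand: bool                             # bids on the advertiser's own brand name
    new_daily_impr: Optional[float] = None  # daily impressions; set only for new keywords


def account_point(keywords, exclude_brand=True, high_traffic=False, threshold=10.0):
    """Return (account-wide QS, mean initial QS of new keywords) for one account.

    Returns None when the selected bucket has nothing to compare.
    """
    pool = [k for k in keywords if not (exclude_brand and k.brand)]
    total_impr = sum(k.impressions for k in pool)
    new = [
        k for k in pool
        if k.new_daily_impr is not None
        and (k.new_daily_impr >= threshold) == high_traffic
    ]
    if total_impr == 0 or not new:
        return None
    account_qs = sum(k.qs * k.impressions for k in pool) / total_impr
    new_qs = sum(k.qs for k in new) / len(new)
    return account_qs, new_qs


account = [
    Kw(10, 1000, brand=True),
    Kw(6, 400, brand=False),
    Kw(8, 400, brand=False),
    Kw(7, 100, brand=False, new_daily_impr=3.0),
    Kw(5, 100, brand=False, new_daily_impr=25.0),
]
print(account_point(account))                       # (6.8, 7.0)
print(account_point(account, exclude_brand=False))  # (8.4, 7.0)
print(account_point(account, high_traffic=True))    # (6.8, 5.0)
```

The toy account shows the brand effect in miniature: including a single heavily served brand keyword lifts the account-wide benchmark from 6.8 to 8.4 without changing the new non-brand keyword’s score at all.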

This suggests either that my assumption (that account-level data would be more valuable for predicting CTR on low-traffic terms) is wrong, or that this is a good reminder that correlation does not imply causation.

In other words, the account’s QS may be similar to that of new keywords on average, and even more similar for higher-traffic keywords, but there are plenty of good reasons for that similarity that don’t require account-level data to be a big factor in the QS of new keywords. Here are a few:

- Chances are that the advertiser is adding keywords that are, on the whole, actually very similar to those already in the account.

- The copy is also likely to be of similar quality and appeal to searchers.

- The advertiser’s brand recognition can have a huge influence on CTR and therefore QS. All else being equal, a well-known site is likely to hold a considerable CTR advantage over its lesser-known competition. Even with a fairly small data set for any given keyword, Google should be able to see this difference pretty quickly.

- Averages lie.

Averages Lie, Or Can At Least Mislead

The results above show a decent connection between account-wide Quality Score and the average Quality Score for new keywords, even if that relationship isn’t necessarily causal. But they also present a pretty misleading picture of how predictive account-wide Quality Score is for any individual keyword.

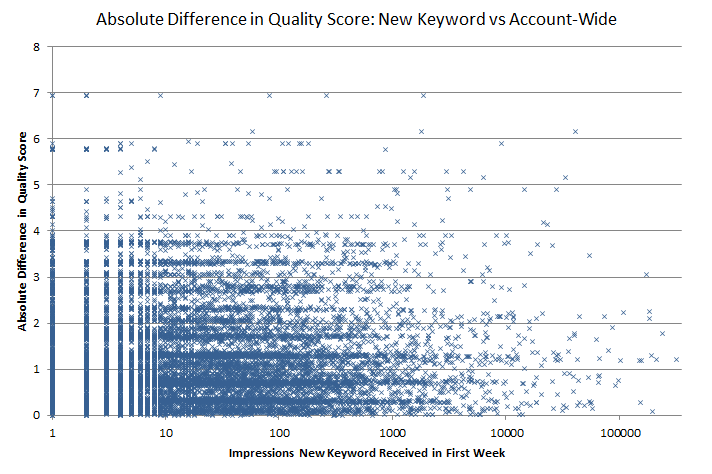

Looking at the absolute difference between the initial Quality Score of individual non-brand keywords and the Quality Score covering all non-brand keywords in the same account, we see a wide range of results across all traffic levels.

This is very noisy stuff. You would need a beautiful mind to see a pattern here. Boiling it down a bit, though, an individual new keyword’s QS deviates from the rest of the program by about 1.4 points on average, in either direction, across all traffic levels. About a quarter of keywords differ by more than 2 points, and an eighth by more than 3.

You get roughly the same numbers if you do a similar comparison, but against an assumed “default” Quality Score of 7 instead of the account’s actual overall score. In other words, if I asked you to guess the Quality Score of a random new non-brand keyword, you would have about the same luck just guessing “7” as guessing the account-wide score for non-brand terms.
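Both comparisons reduce to a mean absolute difference plus the share of keywords beyond a cutoff. Here is a sketch, assuming each new keyword is paired with whatever benchmark it is compared to (its account’s non-brand score, or a flat 7). The function name and sample scores are hypothetical:

```python
def deviation_stats(pairs, cutoffs=(2, 3)):
    """Summarize how far new-keyword QS lands from a benchmark.

    pairs: list of (initial_qs, benchmark_qs) tuples.
    Returns the mean absolute difference and, for each cutoff,
    the share of keywords that differ by more than that many points.
    """
    if not pairs:
        raise ValueError("no keywords to compare")
    diffs = [abs(qs - benchmark) for qs, benchmark in pairs]
    stats = {"mean_abs_diff": sum(diffs) / len(diffs)}
    for c in cutoffs:
        stats[f"share_over_{c}"] = sum(d > c for d in diffs) / len(diffs)
    return stats


new_keyword_qs = [5, 7, 8, 10, 3]
# Compare against a flat "default" guess of 7 for every keyword.
print(deviation_stats([(qs, 7) for qs in new_keyword_qs]))
# {'mean_abs_diff': 2.0, 'share_over_2': 0.4, 'share_over_3': 0.2}
```

Running the same function once with account-wide benchmarks and once with a flat 7 gives a direct way to check whether the account score predicts individual keywords any better than a constant guess.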

Can we make the average difference smaller by tightening the groups we are comparing our new keywords to? Absolutely.

For instance, comparing the Quality Score of a new keyword to the combined score of the other keywords going to the same landing page yields an average difference of a little less than 1 point, and it’s a good bet that Google has an even more sophisticated approach in determining the “related keywords” they use to guide QS for new terms.
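Tightening the comparison group is mostly a matter of grouping before averaging. Here is a sketch for the landing-page case, assuming keyword rows that carry a landing-page URL; the row layouts and names are hypothetical, and this is not a description of how Google picks its related keywords:

```python
from collections import defaultdict


def landing_page_pairs(existing, new):
    """Pair each new keyword's initial QS with its landing page's benchmark.

    existing: list of (landing_page, impressions, qs) for keywords already live.
    new: list of (keyword, landing_page, initial_qs) for newly added keywords.
    The benchmark is the impression-weighted QS of the existing keywords that
    point to the same landing page; new keywords whose page has no existing
    traffic are skipped.
    """
    weighted = defaultdict(float)   # landing page -> sum of qs * impressions
    impressions = defaultdict(int)  # landing page -> total impressions
    for page, impr, qs in existing:
        weighted[page] += qs * impr
        impressions[page] += impr
    pairs = []
    for _keyword, page, initial_qs in new:
        if impressions.get(page, 0) > 0:
            pairs.append((initial_qs, weighted[page] / impressions[page]))
    return pairs


existing = [("/a", 100, 8), ("/a", 300, 6), ("/b", 50, 4)]
new = [("shoes", "/a", 7), ("boots", "/c", 5)]
print(landing_page_pairs(existing, new))  # [(7, 6.5)]
```

The resulting (initial QS, benchmark) pairs can be scored with the same mean-absolute-difference measure used for the account-wide comparison, so the two groupings are judged on equal terms.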

Google Has The Ability & Incentive To Predict CTR Well

Perhaps the best argument that you shouldn’t worry about low QS keywords hurting the rest of your account is that Google has a very strong financial incentive to predict CTR well even when the data is thin.

Misjudging the expected CTR of a new keyword means fewer clicks for Google, whether that is a result of not showing a good ad often enough, or showing a poor ad too prominently.

And Google predicting CTR with thin data is not all that different from an advertiser trying to predict conversion rate or sales per click in order to determine an appropriate keyword bid. At my company, RKG, we know the latter can be done well, even without the vast team that Google has at its disposal.

Sophisticated bidding platforms handle the problem of thin data by taking into account the performance of keyword “cousins” in order to reach statistically significant amounts of data. The secret sauce is in exactly how you do this, but it is generally fair to say that the closer the groups you use for prediction, the better.
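One common, generic way to borrow strength from keyword cousins is to shrink a thin keyword’s observed rate toward its cousins’ pooled rate, treating the pooled rate as worth a fixed number of pseudo-trials (an empirical-Bayes-style blend). This is a swapped-in textbook technique shown for illustration, not RKG’s or Google’s method, and `prior_weight` is a hypothetical tuning knob:

```python
def blended_rate(events, trials, cousin_events, cousin_trials, prior_weight=100.0):
    """Shrink a keyword's observed rate toward its cousins' pooled rate.

    events/trials: the keyword's own data (e.g. clicks/impressions for CTR,
    or conversions/clicks for conversion rate).
    prior_weight: how many trials' worth of weight the cousin rate receives.
    With no data of its own, the keyword gets the cousin rate; as its own
    trials grow, its observed rate dominates.
    """
    if cousin_trials <= 0:
        raise ValueError("cousin group needs data")
    cousin_rate = cousin_events / cousin_trials
    return (events + prior_weight * cousin_rate) / (trials + prior_weight)


print(blended_rate(0, 0, 50, 1000))         # brand-new keyword: cousin rate, about 0.05
print(blended_rate(10, 100, 50, 1000))      # halfway between 0.10 and 0.05, about 0.075
print(blended_rate(1000, 10000, 50, 1000))  # mostly its own 0.10, about 0.0995
```

The closer the cousin group matches the keyword, the less the blend pulls it in the wrong direction, which is the same reasoning behind preferring narrow groups over an account-wide average.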

If Google is using account-wide data as a major factor in initial QS, chances are it does so only when there isn’t a viable, more granular option. For large advertisers this is going to be rare, but it could matter more for smaller programs, assuming it happens at all.

So What Do You Do About Keywords With Low Quality Scores?

Clearly, there’s a benefit to raising your CTR, and in turn Quality Score, as long as you can do so without hurting the conversion side of the equation. Ongoing copy testing, backed by analysis that accounts for both some notion of profit per impression and volume, is a fundamental element of a well-managed search program; but as with pretty much anything else, there are going to be diminishing returns.
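To make profit per impression concrete, here is one simple definition, assuming you know each ad’s CTR, conversion rate, average profit per conversion (before ad spend) and average CPC. The formula and names are an illustration, not a standard AdWords metric, and the example shows how a higher-CTR ad can still lose:

```python
def profit_per_impression(ctr, conv_rate, profit_per_conv, cpc):
    """Expected profit from one impression, net of click costs.

    Each impression yields ctr clicks on average; each click earns
    conv_rate * profit_per_conv and costs cpc.
    """
    return ctr * (conv_rate * profit_per_conv - cpc)


# Hypothetical ads: A wins on CTR, B wins on conversion rate.
ad_a = profit_per_impression(ctr=0.05, conv_rate=0.02, profit_per_conv=100.0, cpc=1.0)
ad_b = profit_per_impression(ctr=0.04, conv_rate=0.03, profit_per_conv=100.0, cpc=1.0)
print(round(ad_a, 4), round(ad_b, 4))  # 0.05 0.08
```

Multiplying by expected impressions brings the volume side back in. In practice the CPC can also shift as CTR and Quality Score change, which this sketch ignores.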

Deleting low Quality Score keywords, just because they have a low Quality Score, in the hopes that you will see a net benefit sounds like a risky endeavor to me, particularly if those keywords are generating conversions or at least hold the potential to do so.

The connection between the QS given to new keywords and that of broad existing keyword groupings appears to be tenuous, and Google has powerful incentives to assign QS with a precision that isn’t so easily manipulated.

Now, if we were talking about Bing Ads, this whole story could be very different, but that is a topic for another day.


Contributing authors are invited to create content for Search Engine Land and are chosen for their expertise and contribution to the search community. Our contributors work under the oversight of the editorial staff and contributions are checked for quality and relevance to our readers. The opinions they express are their own.
