Is Google Hijacking Semantic Markup/Structured Data?


In 2012, I started a series, How The Major Search And Social Engines Are Using The Semantic Web, which took us to a point in time around September 2012. Since then, there have been further interesting developments.

In this article, I am going to focus on recent developments that are search engine and/or Google specific, then take a further look back in search engine history with the assumption (for you history and strategy lovers) that a successful strategy used once may well be used again in similar circumstances.

Image via presentermedia.com under license

Google & The Semantic Web

In the interim, since September of 2012, Google has taken further steps toward becoming more Semantic Web-like in nature, and its SERPs increasingly resemble those of an answer engine.

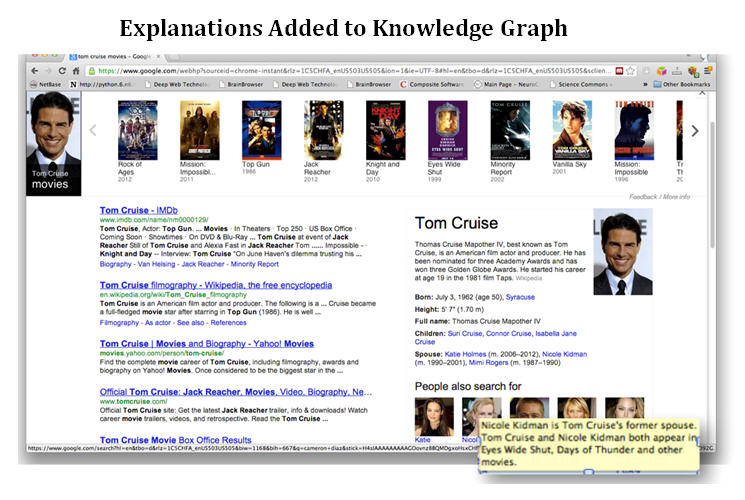

For example, Google has added explanations to the Knowledge Graph. Subsequent to that, on November 9th, Google adopted GoodRelations as part of schema.org. Aaron Bradley wrote an excellent article about that, and you can read more details here if you missed it.

The Knowledge Graph was rolled out globally on December 4, 2012, and various interesting changes were made to flight search and associated activities. None of this is exactly earth shattering, but it is all well worth noting.

Google Knowledge Graph

Google Data Highlighter

On December 12, 2012, Google rolled out a new tool, called the Google Data Highlighter for event data. Upon a cursory read, it seems to be a tagging tool, where a human trains the Data Highlighter using a few pages on their website, until Google can pick up enough of a pattern to do the remainder of the site itself.

Better yet, you can see all of these results in the structured data dashboard. It appears as if event data is marked up and is compatible with schema.org. However, there is a caveat here that some folks may not notice.

No actual markup is placed on the page, meaning that none of the semantic markup using this Data Highlighter tool is consumable by Bing, Yahoo or any other crawler on the Web; only Google can use it!
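To make the distinction concrete, here is a minimal sketch of what on-page markup offers that the Data Highlighter does not. The event fragment, its values, and the `MicrodataReader` helper are all hypothetical, and the parser is deliberately simplistic rather than a full microdata implementation. The point is only that markup embedded in the HTML can be read by any program that fetches the page, while a page annotated solely through the Data Highlighter gives a third-party crawler nothing to extract.

```python
from html.parser import HTMLParser

# Hypothetical event page fragment carrying schema.org microdata on the page itself.
PAGE = """
<div itemscope itemtype="http://schema.org/Event">
  <span itemprop="name">Example Jazz Night</span>
  <time itemprop="startDate" datetime="2013-03-01T20:00">March 1, 8pm</time>
  <span itemprop="location">Example Hall</span>
</div>
"""


class MicrodataReader(HTMLParser):
    """Collects itemprop values, roughly as a generic crawler could."""

    def __init__(self):
        super().__init__()
        self.item_type = None
        self.props = {}
        self._pending = None  # itemprop still waiting for its text content

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if "itemtype" in attrs:
            self.item_type = attrs["itemtype"]
        prop = attrs.get("itemprop")
        if prop is None:
            return
        if "datetime" in attrs:
            # Prefer the machine-readable value when one is given.
            self.props[prop] = attrs["datetime"]
        else:
            self._pending = prop

    def handle_data(self, data):
        if self._pending and data.strip():
            self.props[self._pending] = data.strip()
            self._pending = None


def read_microdata(html):
    """Return (item type, properties) found in the given HTML."""
    reader = MicrodataReader()
    reader.feed(html)
    return reader.item_type, reader.props


if __name__ == "__main__":
    print(read_microdata(PAGE))
    # A page tagged only via the Data Highlighter has no markup to find.
    print(read_microdata("<p>Example Jazz Night, March 1, 8pm</p>"))
```

The second call stands in for a page whose event data exists only inside Google's tool: the text is there for humans, but no structured properties are available to other consumers.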

Google is essentially hijacking semantic markup so only Google can take advantage of it. Google has the global touch and the ability to execute well-thought-out and brilliantly strategic plans.


You may think: how odd. Why would Google go to all that effort to create schema.org standards for all three search engines (actually four at this point: Bing, Yahoo, Yandex and Google), and then create a tool useful only for Google? It appears such a charitable gesture on their part.

A Little Google History On Sitemaps & Schema.Org

Perhaps some history can help us understand this better. Schema.org was first announced on June 2, 2011 as the first time the three major search engines came together to produce a standard since sitemaps.org. Depicted below are the home pages of both schema.org and sitemaps.org. Note the striking similarity.

Sitemaps.org & Schema.org Homepages

Even the terms and conditions appear similar, as can be seen in the figure below. OK, so now you may be thinking: so what, they used the same template. It is much more interesting than that. As you know, Google is brilliant at applying successful strategies, and if a tactic succeeds once, Google is very likely to pursue it again. (All you historians out there know how history tends to repeat itself.) Perhaps where Google is going with structured markup can be inferred from what it did with sitemaps.org.

Robots.Txt File

On a similar note, www.example.com/robots.txt is the standard place to point crawlers at a sitemap (via a Sitemap: line); however, Google also provides the option to submit the sitemap directly. (Most search engines do, actually.) Given that the search engines allow direct sitemap submission (and may even prefer it), it’s interesting that a robots.txt file is no longer actually needed for sitemap discovery. Many sites do not have one.
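As a small illustration of the open route, a robots.txt file can advertise a sitemap to every crawler through a `Sitemap:` line, whereas a sitemap submitted directly to one search engine is visible only to that engine. The sketch below uses Python's standard `urllib.robotparser` (the `site_maps()` method requires Python 3.8 or later); the robots.txt contents and the `sitemaps_from_robots` helper are made-up examples.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for www.example.com.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Sitemap: http://www.example.com/sitemap.xml
"""


def sitemaps_from_robots(robots_txt):
    """Return the sitemap URLs that a robots.txt file advertises to any crawler."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # site_maps() returns None when no Sitemap: line is present.
    return parser.site_maps() or []


if __name__ == "__main__":
    print(sitemaps_from_robots(ROBOTS_TXT))
```

A site that relies only on direct submission would yield an empty list here, which is exactly what a crawler without access to that submission would see.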

In some sense, however, this is strongly reminiscent of the action being taken by Google with regard to the Data Highlighter for events. Net effect: it makes this information available to Google, but not necessarily to others.

There seems to be a lot of controversy around this, rising and falling with the situation; you can see a recent example noted in Matt McGee’s article. (For the rest, I will leave it to historians to make the projections.)

Historically, it also seems that, for search engines and websites/webmasters alike, best practice is to adhere to standards; that is what best practices ultimately boil down to, and why standards are so important! Ultimately, they should be enforceable (one would assume).

How Small Merchants Can Remain Competitive In Google Shopping

Small merchants can remain competitive in Google Shopping by adding rich snippets and more. Below are some mechanisms worth looking into, as it appears Google is making it easier for small merchants to participate in Google Shopping, so that its inventory retains more eclectic and interesting product offerings!

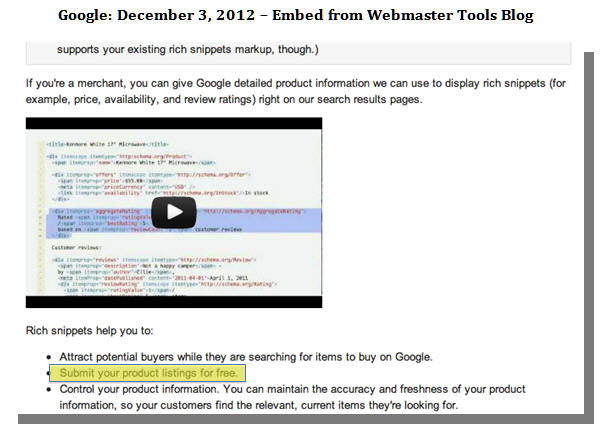

If you understand the big picture, it is easier to predict not only what to do, but how and why with regard to getting good SERP visibility. For example, I am embedding a portion of Google’s webmaster advice on rich snippets and products. You can find the link here. I checked the last update, which was December 3, 2012.

If we are to believe what we see in Google Webmaster Tools above, it means you can get into Google Shopping free using structured markup! If anyone experiments with this, I would love to hear about it in the comments.

Considering how fast things are changing with schema.org microdata, there is a need for automation to remain both competitive and compliant.
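One simple form such automation could take is generating the markup from product data rather than hand-editing each page. The sketch below is an assumption-laden illustration, not Google's recommended template: the `product_microdata` function and the sample product are hypothetical, and the property set (name, price, priceCurrency, availability) is only a small subset of the schema.org Product and Offer vocabulary.

```python
from html import escape


def product_microdata(name, price, currency, in_stock=True):
    """Render a minimal schema.org Product/Offer microdata fragment."""
    availability = "InStock" if in_stock else "OutOfStock"
    return (
        '<div itemscope itemtype="http://schema.org/Product">\n'
        f'  <span itemprop="name">{escape(name)}</span>\n'
        '  <div itemprop="offers" itemscope itemtype="http://schema.org/Offer">\n'
        f'    <meta itemprop="priceCurrency" content="{escape(currency)}">\n'
        f'    <span itemprop="price">{price:.2f}</span>\n'
        f'    <link itemprop="availability" href="http://schema.org/{availability}">\n'
        "  </div>\n"
        "</div>"
    )


if __name__ == "__main__":
    # Hypothetical boutique product.
    print(product_microdata("Hand-dyed Wool Scarf", 24.5, "USD"))
```

Because the fragment is produced from one template, a vocabulary change means updating a single function instead of every product page.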

On Google’s part, this would offer an ideal mechanism for small business owners with unique, boutique-style products or eclectic products to enter Google Shopping. This would enrich and augment the current inventory on Google Shopping. On the part of the small merchant, it would now be necessary to strongly consider this option.

On a similar note, I also found this link to be of interest on Google, posted the same day! It is a great guide on best practices. Note the sitemap and robots.txt stipulations as well as those on rich snippets. It also was last updated December 3, 2012.

It may be that much of this follows on the heels of Microsoft’s Scroogled campaign, which, among much else, claims that PageRank has been replaced by Pay to Rank as an algorithm. That may have elements of truth, but PageRank is now only one of a couple of hundred signals Google uses to rank pages.

Essential & Professional Practices For 2013

Based on the information above, it seems to me that retailers can profit by following my suggestions below.

- Stick to industry standards and standards recommended by the search engines.

- Always provide unique and interesting content on your pages.

- Keep your information fresh and relevant.

- Provide clean sitemaps and make use of the <lastmod> feature.

- Mark up your pages for rich snippets. If you are not sure how to do that, engage experts to help you or look for automated software that can do it for you!
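For the sitemap suggestion in the list above, here is a minimal sketch of writing `<lastmod>` values with Python's standard library. The `build_sitemap` helper, the URLs and the dates are illustrative assumptions; the namespace is the one published by sitemaps.org.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from an iterable of (url, last_modified_date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, modified in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        # lastmod uses W3C date format; YYYY-MM-DD is the simplest valid form.
        ET.SubElement(url, "lastmod").text = modified.isoformat()
    return ET.tostring(urlset, encoding="unicode")


if __name__ == "__main__":
    # Hypothetical pages and modification dates.
    print(build_sitemap([
        ("http://www.example.com/", date(2013, 1, 15)),
        ("http://www.example.com/products/scarf", date(2013, 1, 10)),
    ]))
```

In practice the dates would come from wherever the site records content changes, so that `<lastmod>` reflects real edits rather than the time the sitemap was generated.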

Lastly, in terms of 2013 predictions, count on the Data Highlighter being expanded to more than just events!

Contributing authors are invited to create content for Search Engine Land and are chosen for their expertise and contribution to the search community. Our contributors work under the oversight of the editorial staff and contributions are checked for quality and relevance to our readers. The opinions they express are their own.
