Should We Disavow Links To Combat Negative SEO?

The Negative SEO conversation has truly been reignited and has everyone debating its effect again. Conversations are exploding with talk of lawsuits, paying people to remove backlinks, and even blowing up the site and starting all over. Fair enough. But the way I see it, there is a simple solution to this issue.

Disavow Links

I wrote this in a comment on SERoundtable and Barry Schwartz beat me to the punch recently. Danny also addressed it in passing in a Q&A with Matt Cutts, but I wanted to elaborate on this even more.

Think of this as an open letter to Google, or just a general expression of how I feel about this conversation. It honestly doesn’t matter to me. The bottom line is that I think this issue could easily be avoided and search engines should have no issue implementing this.

What I think Google in particular is failing to realize is that there are good SEOs out there who want to help them succeed. Of course we all want our clients to outrank their competitors, but there is a big part of us that I think Google has “won over”, so helping them improve their engine would be a win not just for our clients but for the SEO community as a whole.

How I See This Working

We submit things to Google all the time. We submit XML sitemaps and robots.txt files, and we even submit reconsideration requests and report shady practices when we see them happen. So to some extent, we do have an element of conversation with them. Sometimes it may feel one-sided, but I think they are listening.

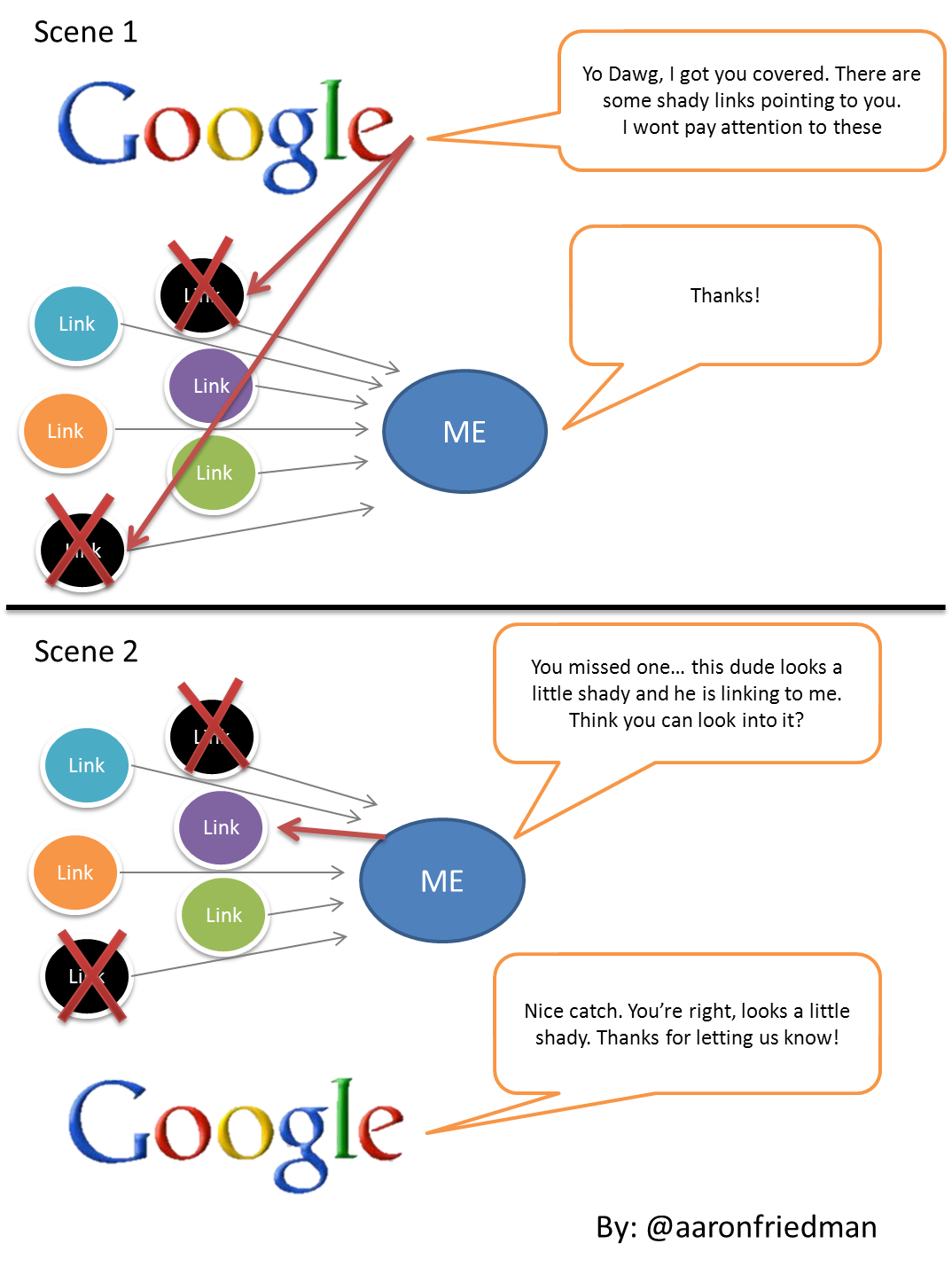

Why not submit a list of shady links that point to our site? And I don’t mean just in Webmaster Tools. I mean, webmasters could create a page for all engines to publicly crawl. Call it “yoursite.com/badlinks.xml” (not very clever, but you get the idea).
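To make the idea concrete, here is a minimal sketch of how a webmaster might generate such a file. No engine defines a format for this, so the `<badlinks>` root, the `<link>` elements, the `build_badlinks_xml` helper, and the example URLs are all assumptions made up for illustration.

```python
import xml.etree.ElementTree as ET


def build_badlinks_xml(bad_urls):
    """Serialize unwanted linking URLs into a hypothetical badlinks.xml body."""
    # Hypothetical schema: a <badlinks> root with one <link> child per URL.
    root = ET.Element("badlinks")
    # De-duplicate and sort so the published file is stable between runs.
    for url in sorted(set(bad_urls)):
        link = ET.SubElement(root, "link")
        link.text = url
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    # Made-up example URLs the webmaster considers shady.
    print(build_badlinks_xml([
        "http://spam-directory.example/page1",
        "http://link-farm.example/widgets",
        "http://spam-directory.example/page1",
    ]))
```

The output would be saved at the site root so that any crawler could fetch it, much like a sitemap.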

Let Webmasters Be Proactive

The engines aren’t perfect, and they undoubtedly miss things. Eventually they will catch up, but why not let webmasters be proactive? This would accomplish two things:

- It would make people more selective about dishing out links, which actually would make getting a link that much more valuable.

- It would give Google a dedicated page to crawl that provides this information. In essence, they would be getting great information while handing off the dirty work to webmasters.

The engines are already really good at crawling the Web, and are aware of the links that point to our sites. Why not match it up?

The Formula Is Simple

If a site points to me, and I as the webmaster think it looks shady, give me an opportunity to block it.
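The matching step could look roughly like the sketch below, seen from the engine's side. It assumes a hypothetical badlinks.xml made of a `<badlinks>` root with `<link>` children, and the function names and URLs are invented for illustration. How any real engine would weigh such a list is unknown.

```python
import xml.etree.ElementTree as ET


def parse_badlinks(xml_text):
    """Return the set of URLs listed in a hypothetical badlinks.xml document."""
    root = ET.fromstring(xml_text)
    return {el.text.strip() for el in root.iter("link") if el.text}


def split_inbound_links(inbound_links, blocked):
    """Partition the links an engine already knows about into counted and ignored."""
    counted = [url for url in inbound_links if url not in blocked]
    ignored = [url for url in inbound_links if url in blocked]
    return counted, ignored


if __name__ == "__main__":
    # Made-up file contents and crawl data.
    badlinks = "<badlinks><link>http://link-farm.example/widgets</link></badlinks>"
    known_inbound = [
        "http://news.example/review",
        "http://link-farm.example/widgets",
    ]
    counted, ignored = split_inbound_links(known_inbound, parse_badlinks(badlinks))
    print("counted:", counted)
    print("ignored:", ignored)
```

Exact URL matching keeps the sketch simple. A real system would likely also need domain-level entries and URL normalization.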

There is of course the possibility of spammers figuring out other alternatives and taking advantage of the system. But linking is already in trouble and spammers have already been taking advantage of this for years.

I humbly think this would accomplish far more than any other method. It lets us be proactive and takes the guesswork away from the engines. This would make linking across the Web more effective and safe, and would ultimately put this conversation to rest.

Because really, in this day and age, it’s ridiculous that Negative SEO should be part of the conversation. The engines should be smarter than that, and I believe they are. With the help of the good guys, they can refine the link graph and make it more accurate.

What do you think search engines should be doing to combat negative SEO?

Contributing authors are invited to create content for Search Engine Land and are chosen for their expertise and contribution to the search community. Our contributors work under the oversight of the editorial staff and contributions are checked for quality and relevance to our readers. The opinions they express are their own.
