With Panda in stealth mode, why Google’s quality updates should be on your algorithmic radar [Part 1]

What are Google's quality updates (aka Phantom updates), and how can you recover? In part one of a two-part series on Phantom, columnist Glenn Gabe explains the history and possible mechanics of these algorithm updates.

In May of 2015, I uncovered a major algorithm update that I called “Phantom 2.” It ended up being a huge update that impacted many websites across the web globally. I’ll explain why it was named “2” and not “1” soon. Google first denied there was an update in early May 2015, but then finally explained that they did indeed roll one out. They said it was a “change to its core ranking algorithm with how it assesses quality.”

And with Google always looking to surface the highest-quality, most relevant information for users, that statement was incredibly important. This led the folks at Search Engine Land to call the May 2015 update Google’s “Quality Update.” (I still like Phantom, but hey, I’m biased!)

Note, and this is important: I called the update “Phantom” simply because it was a mysterious one. Google has not officially named the update Phantom, and from what I gather, they don’t like the name very much at all. So for the rest of the post, both Phantom and Quality Update will be used interchangeably.

In part one of this series, I’ll cover an overview of Google’s quality updates, the history and frequency of updates, how I believe the updates are working and rolling out, and some of their characteristics.

Then in part two, I’ll cover a number of problems I’ve seen across sites that have seen negative impact during quality updates. In that post, you’ll learn why “quality user engagement” is a big deal when it comes to Phantom.

First, a note about Panda

When most people in the industry hear “quality,” they think about Google Panda. But Panda has changed, especially since the last old-school Panda update, which was October 24, 2014. After that, we had Panda 4.2 in July of 2015, which was a long, slow rollout due to technical problems. After heavily analyzing that update, I called it a giant ball of bamboo confusion.

Then in early 2016, we learned that Panda was now part of Google’s core ranking algorithm. And with that, we would never hear about another Panda update again. Sad, but true. Moving forward, each Panda update would get prepped and then slowly roll out (without Google announcing the update). And each cycle can last months, according to Google’s Gary Illyes. Therefore, it’s very hard to tell if Panda is impacting a site. That’s a big change from Panda of the past.

I’ve helped a lot of companies with Panda since 2011, and I have access to a lot of Panda data (sites that have dealt with Panda problems since 2011). Since Panda 4.2, the only update that looked Panda-like to me was the March 2016 update. And I can’t say for sure if that was Panda; it just looked more like Panda than a quality update (AKA Phantom).

So, while many still focus on Google Panda, we’ve seen five Google quality updates roll out since May of 2015. And they have been significant. That’s why I think SEOs should be keenly aware of Google’s quality updates, or Phantom for short. Sometimes I feel like Panda might be doing this as Google’s quality updates roll out.

Source: Giphy.com

Quality updates are global, domain-level and industry-agnostic

Since May of 2015, I have been contacted by many companies that have been impacted by Google’s quality updates from almost every niche and from many different countries. So, as Google has stated before about major algorithm updates, quality updates are global and do not target specific niche categories.

For example, I’ve heard from e-commerce retailers, news publishers, affiliate sites, Q&A sites, review sites, educational sites, food and recipe sites, health and wellness sites, lyrics websites and more. Based on my analysis of sites impacted positively and negatively, Phantom is keenly focused on “quality user experience,” which can mean several things. Don’t worry, I’ll cover more about that soon, while also diving much deeper in part two of this series.

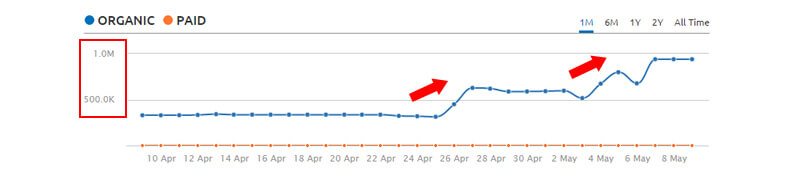

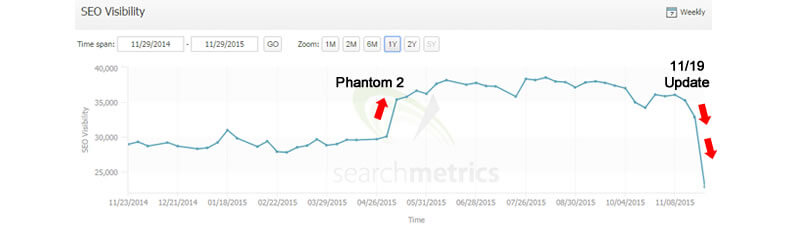

Also, the impact from Google’s quality updates (yes, there have been several) looks like it’s domain-level. It does not seem to employ a URL-by-URL algo adjustment like the mobile-friendly algorithm. When a site doesn’t meet a certain quality threshold, all hell can break loose on the site. That includes a drop in rankings, loss of traffic, loss of rich snippets and more. For example, here are changes in search visibility for sites impacted by Phantom 2 in May of 2015.

The history of quality updates — Phantom’s path since 2015

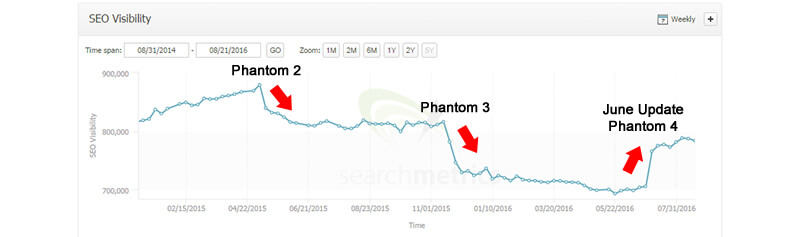

Since Google rarely comments on its core ranking algorithm changes, the following history is based on subsequent algorithm updates that were connected to Phantom 2 in May of 2015. For example, here’s a great visual of a site experiencing impact across the various quality updates I’ve picked up.

It’s also worth mentioning that Google pushes between 500 and 1,000 updates per year. Many are small updates that go unnoticed, while a few are major changes with substantial impact. Quality updates fit into the latter category. Also, major algorithm updates typically occur only a handful of times per year. And again, quality updates seem connected to one another. I’ll provide more screenshots of the connection later in this post.

Recovery or additional impact during quality updates

When Phantom 2 rolled out in May of 2015, companies that had been impacted frantically worried about the potential for recovery. Could they recover lost rankings and traffic, was it a permanent adjustment, and how long would it take to recover?

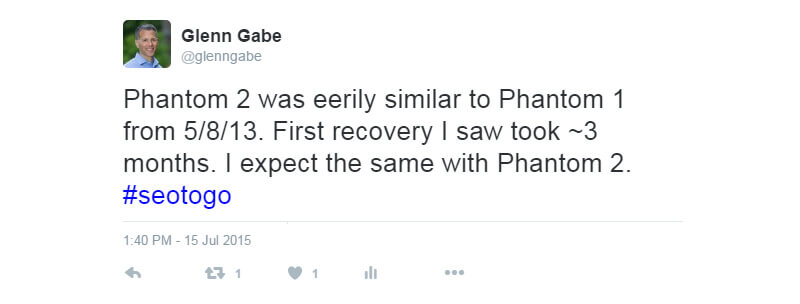

Well, I had seen an update like Phantom 2 before, in May of 2013, which was the original Phantom (Phantom 1 if you’re keeping track). Interesting side note, both Phantom 1 and 2 rolled out in May. Weird, but true.

Based on helping a number of companies with that update in 2013, I saw the first recovery from Phantom 1 about three and a half months after it rolled out, and more sites recovered as time went on. Given that experience with Phantom 1, I had a feeling that fixing core quality problems could yield recovery from Phantom 2 as well. But it would take several months before that happened. Here’s a tweet of mine after Phantom 2 rolled out.

September 2015

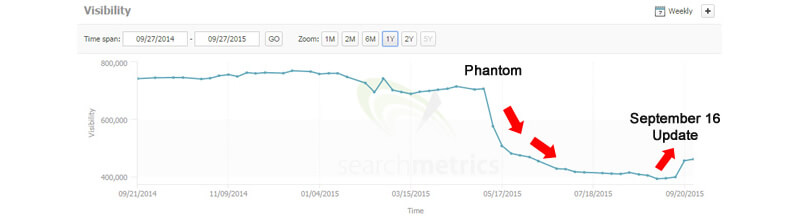

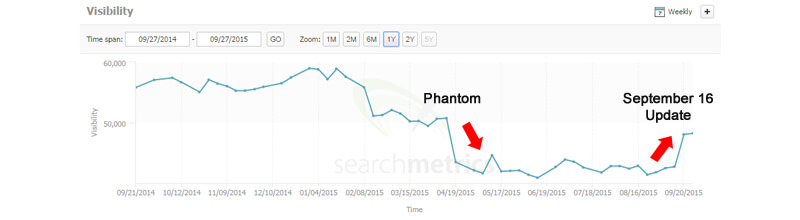

And I was right. After Phantom 2 rolled out in May of 2015, I projected a refresh of that algorithm in either late summer or early fall. I was close, as we saw the first movement with sites impacted by Phantom 2 in September of 2015. I saw sites start to recover while also seeing additional negative or positive impact during those updates. There was a clear connection with Phantom 2.

Phantom 3 in November of 2015 — It was BIG

Little did I know that we were in for a major tectonic shift soon after that. That’s when I picked up the November 2015 update (which many called Phantom 3). That was a huge update, and many sites that had been impacted by previous quality updates (Phantom 2 in particular) saw movement. Check out the trending below from several sites. You can clearly see the connection with Phantom 2.

January 2016: another quality connection?

Just as webmasters were settling down from Phantom 3 and the holidays, the Richter scale in my office started to rattle again. That was in January of 2016, which looked tied to previous quality updates as well.

Searchmetrics covered the update on their blog. I also saw a lot of movement during the January update. Additionally, Google confirmed that there was a core ranking algorithm change at that time.

A hot start to the summer of 2016: the June update

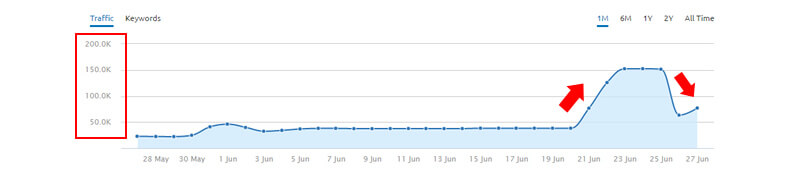

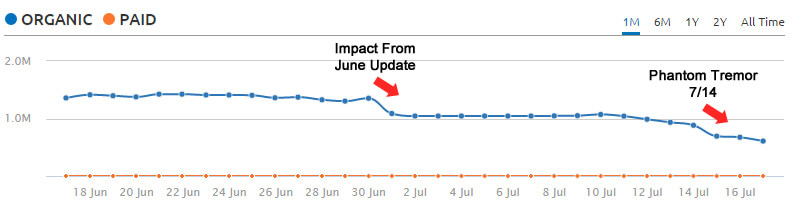

Then we had a red-hot start to the summer with the June 2016 quality update. That was one of the biggest I have seen, with many sites seeing substantial movement and volatility.

The connection to previous quality updates was extremely clear. After publishing a post covering the update, I had many companies reach out to me about movement they were seeing. That includes sites surging in rankings and traffic and others dropping significantly. Again, it was a big update.

Frequency of quality updates

It seems that there needs to be a data refresh, along with the algorithm rollout, in order for websites to see changes. Sound familiar (Cough, old-school Panda)? Let’s talk more about the data refresh.

Note: This is how I believe quality updates are working. Only Google knows the exact process. We know that Google’s quality updates must use data collected over time. And when the algorithm update rolls out, it uses the data that Google has collected and processed up to that point (or some point in the past). So even if Google collects more data beyond that date, it’s not until the algorithm rolls out again and uses the latest data that websites can see changes.

Again, I can’t say 100 percent that’s how Google’s quality updates are working, but it sure seems that way based on what I’ve seen since May of 2015. It doesn’t seem to be running all the time. Instead, it’s very old-school Panda-like in how it rolls out.

If the algorithm were real-time, then it could continually use fresh data to make decisions about where URLs should rank. But it doesn’t seem to do that (yet). Based upon what I have seen, a refresh is happening every several months; sometimes it’s every two or three months, and other times it’s every five or six months. Like Panda of the past, there’s not an exact time frame, but it’s great to see quality updates roll out every few months. I hope that continues.
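To make that idea concrete, here is a minimal Python sketch of the refresh model described above. It is purely a toy illustration of my assumption about how quality updates behave, not Google's actual process: the class name `ToyQualityModel`, the single numeric "signal" per domain, and the domain `example.com` are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ToyQualityModel:
    """Toy model of a periodically refreshed quality score (illustrative only)."""

    # Data collected continuously between rollouts (hypothetical signal per domain).
    live_signals: dict = field(default_factory=dict)
    # Scores that rankings actually use; only changed by a rollout.
    applied_scores: dict = field(default_factory=dict)

    def collect(self, domain: str, signal: float) -> None:
        # Collection keeps happening, but nothing visible changes yet.
        self.live_signals[domain] = signal

    def roll_out(self) -> None:
        # A rollout snapshots whatever data has been collected so far.
        self.applied_scores = dict(self.live_signals)


model = ToyQualityModel()
model.collect("example.com", 0.3)   # site has quality problems
model.roll_out()                    # first update: the low score is applied
model.collect("example.com", 0.8)   # site fixes problems; fresh data is collected
score_before_refresh = model.applied_scores["example.com"]  # still the old score
model.roll_out()                    # next update: fresh data is finally used
score_after_refresh = model.applied_scores["example.com"]   # now the new score
```

In this sketch, the site's improvements sit in the collected data for the whole gap between rollouts, which would match the months-long wait for recovery that sites have experienced.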

New factors, or just a data refresh?

Also, since Google isn’t telling us much about its quality updates (or any core ranking algorithm change), we don’t know if the algorithm itself is also being updated (factor-wise). For example, engineers could be adding new elements to the algorithm while also using fresh data. Based on what I’ve seen, I do think new elements are being added. More about that in part two of my series.

It’s also important to note that quality updates can stop at any point (be retired), or Google could advance the algorithm to real-time. Unfortunately, we won’t know anything about that, since Google rarely comments on its core ranking algorithm. Confusing, right? Welcome to Google Land. This is why it’s critically important to have access to a lot of data, across websites, countries, and categories. For example, the data set I have access to is across many sites dealing with quality problems over the years. It makes it easier to spot algorithm updates throughout the year.

Just like Panda tremors of the past, there are tremors after quality updates

Similar to what we saw with Panda, which was clarified by John Mueller, Google can refine an algorithm after a major update rolls out to ensure it’s producing optimal results. This is what I called “Panda Tremors,” since I saw that happen a lot after major Panda updates. Here is John’s response from 2014 after I noticed Panda 4.0 tremors:

Well, we’ve seen similar things with Google’s quality updates. Some sites see more negative impact, others see more positive impact, while others even reverse direction (this typically happens for a few weeks after a major algo update). It’s fascinating to analyze, and we saw it with the June 2016 update, too. It’s important to be aware of tremors, since they can cause further drops, further gains, or even a reversal of the initial movement. That’s why it’s important to monitor your site after a major algorithm update rolls out.
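As one rough way to monitor for that, the Python sketch below compares average search visibility before and after a given date. It assumes you already export some visibility or traffic metric by date from your own tools. The function name `visibility_change`, the weekly numbers, and the dates are all made up for illustration.

```python
from datetime import date
from statistics import mean


def visibility_change(series: dict[date, float], update_day: date) -> float:
    """Percent change in mean visibility on/after update_day versus before it."""
    before = [v for d, v in series.items() if d < update_day]
    after = [v for d, v in series.items() if d >= update_day]
    if not before or not after:
        raise ValueError("need data on both sides of the update date")
    return (mean(after) - mean(before)) / mean(before) * 100


# Hypothetical weekly visibility scores around a suspected update date.
weekly_visibility = {
    date(2016, 5, 16): 100.0,
    date(2016, 5, 23): 100.0,
    date(2016, 6, 6): 80.0,
    date(2016, 6, 13): 70.0,   # a further drop a week later could be a tremor
    date(2016, 6, 20): 90.0,   # a partial reversal could be one as well
}

change_pct = visibility_change(weekly_visibility, date(2016, 6, 1))
```

Running the same comparison week by week after an update, rather than once, is what would surface the later swings described above.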

With the Phantom overview completed, it’s time to review factors (in part 2)

Here in part 1, I’ve covered what Google’s quality updates are, when I believe they’ve rolled out, recovery possibilities, data refreshes, tremors and more. If you’re interested in finding out what Phantom might be targeting, based on the many sites I’ve analyzed that were impacted by Google’s quality updates, then check out part two of my series here:

In part two, I cover a number of “low quality user experience” factors I’ve seen during my Phantom travels.

Contributing authors are invited to create content for Search Engine Land and are chosen for their expertise and contribution to the search community. Our contributors work under the oversight of the editorial staff and contributions are checked for quality and relevance to our readers. The opinions they express are their own.
