Why Google’s quality updates should be on your algorithmic radar [Part 2]: The connection to low-quality user experience

In the second installment of his two-part series on Google's quality updates (aka Phantom updates), columnist Glenn Gabe explains the connection between user experience and organic search performance.

In part one of this series, I covered a number of important points about Google’s quality updates (aka Phantom). I tried to provide a solid foundation for understanding what Phantom is and how it works, explaining its history, its path since 2015 and how it rolls out.

In part two, I’m going to dive deeper by providing examples of “low-quality user engagement” that I’ve seen on sites affected by Phantom. I’ve analyzed and helped many companies that have been impacted by Google’s quality updates since May of 2015, and I’ve seen many types of quality problems during my travels. Today, I’ll try to explain more about those problems and provide some recommendations for sites that have been impacted.

So fire up your proton packs. There’s ectoplasm ahead.

Back to school, back to algorithm updates

First, a quick note about what we’ve been seeing this fall so far. September of 2016 was one of the most volatile months in a long time from an algorithm update standpoint. We’ve witnessed a serious combination of updates since August 31, 2016.

First, Joy Hawkins picked up a major local algorithm change that many in the local space are calling Possum. That was combined with what looked like another quality update starting on August 31 (I’m covering Google’s quality updates in this series). And to add to the already volatile September, Penguin 4 was announced on September 23 (which started to roll out before the announcement date).

And if you’re thinking, “Would Google really roll out multiple algorithm updates at one time?” the answer is YES. They have done this several times before. My favorite was the algorithm sandwich in April of 2012, when Google rolled out Panda, then Penguin 1.0, and then Panda again, all within 10 days.

But again, I saw many sites impacted by the August 31 update that were also impacted by previous quality updates. Here are two sites that were impacted by the early September update with a clear connection to previous quality updates. And I’m not referring to Penguin impact, which seems to have started mid-month. I’m referring to sites without Penguin issues that have been impacted by previous quality updates.

I’m planning on covering more about this soon, but I wanted to touch on the September volatility before we hop into this post. Now, back to our regularly scheduled program.

Phantom and low-quality user engagement: hell hath no fury like a user scorned

After analyzing many sites that either dropped or surged during Google quality updates, I saw many examples of what I’m calling “low-quality user experience” (which could include low-quality content).

I’ve helped a lot of companies with Panda since 2011, and there is definitely overlap with some of the problems I surfaced with Phantom. That said, quality updates seem more focused on user experience than content quality. Below, I’ll cover some specific low-quality situations I’ve uncovered while analyzing sites impacted by Phantom.

It’s also important to note that there’s usually not just one problem that’s causing a drop from a quality update. There’s typically a combination of problems riddling a website. I can’t remember analyzing a single site that got hit hard that had just one smoking gun. It’s usually a battery of smoking guns working together. That’s important to understand.

Quick disclaimer: The list below does not cover every situation I’ve come across. It’s meant to give you a view of several core quality problems I’ve seen while analyzing sites impacted by Google’s quality updates. I cannot tell you for sure which problems are direct factors, which ones indirectly impact a site and which ones aren’t even taken into account. And again, it’s often a combination of problems that led to negative impact.

Personally, I believe Google can identify barriers to usability and the negative impact those barriers have on user engagement. And as I’ve always said, user happiness typically wins. Don’t frustrate users, force them a certain way, push ads on them, make them jump through hoops to accomplish a task and so forth.

One thing is for sure: I came across many, many sites during my Phantom travels that had serious quality problems (which included a number of the items I’m listing below).

Let’s get started.

Clumsy and frustrating user experience

I’ve seen many sites that were impacted negatively that employed a clumsy and frustrating user experience (UX). For example, I’ve looked at websites where the main content and supplementary content were completely disorganized, making it extremely hard to traverse pages within the site. Mind you, I’m using two large monitors working in tandem — imagine what a typical user would experience on a laptop, tablet, or even their phone!

I have also come across a number of situations where the user interface (UI) was breaking, the content was being blocked by user interface modules, and more. This can be extremely frustrating for users, as they can’t actually read all of the content on the page, can’t use the navigation in some areas and so on.

Taking a frustrating experience to another level, I’ve also analyzed situations where the site would block the user experience. For example, blocking the use of the back button in the browser, or identifying when users were moving to that area of the browser and then presenting a popup with the goal of getting those users to remain on the site.

To me, you’re adding fuel to a fire when you take control of someone’s browser (or inhibit the use of their browser’s functionality). And you also increase the level of creepiness when showing that you are tracking their mouse movements! I highly recommend not doing this.

Aggressive advertising

Then there were times ads were so prominent and aggressive that the main content was pushed down the page or squeezed into small areas of the page. And, in worst-case scenarios, I actually had a hard time finding the main content at all. Again, think about the horrible signals users could be sending Google after experiencing something like that.

Content rendering problems

There were some sites I analyzed that were unknowingly having major problems with how Google rendered their content. For example, everything looked fine for users, but Google’s “fetch and render” tool revealed dangerous problems. That included giant popups in the render of every page of the site (hiding the main content).

I’ve also seen important pieces of content not rendering on desktop or mobile — and in worst-case scenarios, no content rendered at all. Needless to say, Google is going to have a hard time understanding your content if it can’t see it.

Note, John Mueller also addressed this situation in a recent Webmaster Hangout and explained that if Google can’t render the content properly, then it could have a hard time indexing and ranking that content. Again, beware.

Deception (ads, affiliate links, etc.)

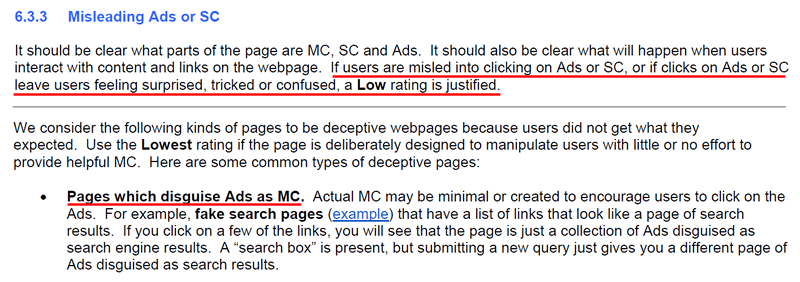

One of the worst problems I’ve come across when analyzing both Panda and Phantom hits relates to ad deception. That’s when ads are woven into the main content and look like the main content. So users believe they are clicking on links that will take them to additional content on the same site, but instead, they are whisked off the site downstream to an advertiser.

That could be a shockingly bad user experience for visitors, but the ad deception ride typically doesn’t stop there. Some of those downstream, third-party sites are extremely aggressive content-wise, contain malware, force downloads, and more. So combine deception with danger, and you’ve got a horrible recipe for Mr. Phantom. Again, hell hath no fury like a user scorned.

Quick case study: I remember asking a business owner who was negatively impacted by a quality update why a content module in the middle of their pages that contained ads wasn’t marked as sponsored (the ads were deceptive). They said that the click-through rate was so good, they didn’t want to impact their numbers. So basically, they kept deceiving users to maintain ad revenue. Not good, to say the least. It’s a prime example of what not to do.

In addition, you have to look no further than Google’s Quality Rater Guidelines (QRG) to find specific callouts of ad deception, and how pages that employ that type of deception should be considered low-quality. I find many people still haven’t read Google’s QRG. I highly recommend doing so. You will find many examples of what I’m seeing while analyzing sites impacted by Google’s quality updates.

More excessive monetization

I also saw additional examples of aggressive monetization during my Phantom travels. For example, forcing users to view 38 pages of pagination in order to read an article (with only one paragraph per page). And of course, the site is feeding several ads per page to increase ad impressions with every click to a new page. Nice.

That worked until the sites were algorithmically punched in the face. You can read my post about Panda and aggressive advertising to learn more about that situation. Phantom has a one-two punch as well. Beware.

Aggressive popups and interstitials

First, yes, I know Google has not officially rolled out the mobile popup algorithm yet. But that will be a direct factor when it rolls out. For now, what I’m saying is that users could be so thrown off by aggressive popups and interstitials that they jump back to the search results quickly (resulting in extremely low dwell time). And as I’ve said a thousand times before, extremely low dwell time (in aggregate) is a strong signal to Google that users did not find what they wanted (or did not enjoy their experience on the site).

While analyzing sites negatively impacted by Google’s quality updates, I have seen many examples of sites presenting aggressive popups and other interstitials that completely inhibit the user experience (on both desktop and mobile). For some of the sites, the annoyance levels were through the roof.

Now take that frustration and extrapolate it over many users hitting the site, over an extended period of time, and you can see how bad a situation it could be, quality-wise. Then combine this with other low-quality problems, and the ectoplasm levels increase. Not good, to say the least.

It’s also important to note that Googlebot could end up seeing the popup as your main content. Yes, that means the amazing content you created will be sitting below the popup, and Googlebot could see that popup as your main content. Here’s a video of Google’s John Mueller explaining that.

The core point here is to not inhibit the user experience in any way. And aggressive popups and interstitials can absolutely do that. Beware.

Lackluster content not meeting user expectations

From a content standpoint, there were many examples of sites dropping off a cliff that contained content that simply couldn’t live up to user expectations. For example, I reviewed sites that were ranking for competitive queries, but the content did not delve deep enough to rank for those queries. (This often involved thin content that had no shot at providing what the user needed.)

Does this remind you of another algorithm Google has employed? (Cough, Panda.) Like I said, I’ve seen overlap with Panda during my Phantom travels.

As you can guess, many users were probably not happy with the content and jumped back to the search results. As I mentioned earlier, very low dwell time (in aggregate) is a powerful signal to Google that users were not happy with the result. It’s also a giant invitation to Panda and/or Phantom.

By the way, dwell time is not the same as bounce rate in Google Analytics. Very low dwell time is someone searching, visiting a page, and then returning to the search results very quickly.

I believe Google can understand this by niche — so a coupon site would be much different from a news site, which would be different from an e-commerce site. There are flaws with bounce rate in Google Analytics (and other analytics packages), but that’s for another post.
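To make the difference between the two metrics concrete, here is a minimal Python sketch. It assumes a hypothetical log of visits that started from a search results page, which is data only a search engine would actually hold; site owners cannot directly observe a return to the search results. The `SearchVisit` fields and the 10-second threshold are illustrative assumptions, not anything Google has published.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class SearchVisit:
    """One hypothetical visit that began with a click from the search results."""
    seconds_on_site: float      # time between the click and leaving the site
    pages_viewed: int           # pages viewed on the site during the visit
    returned_to_results: bool   # whether the user went back to the search results


def is_bounce(visit: SearchVisit) -> bool:
    # Analytics-style bounce: a single-page visit, however long it lasted.
    return visit.pages_viewed == 1


def is_short_dwell(visit: SearchVisit, threshold_seconds: float = 10.0) -> bool:
    # Low dwell time: the user returned to the search results quickly.
    # The threshold is an arbitrary value chosen for illustration.
    return visit.returned_to_results and visit.seconds_on_site < threshold_seconds


def short_dwell_rate(visits: Sequence[SearchVisit]) -> float:
    # Share of visits with low dwell time, i.e. the "in aggregate" view.
    if not visits:
        return 0.0
    return sum(is_short_dwell(v) for v in visits) / len(visits)
```

In this sketch, a user who reads a single page for five minutes and then closes the tab counts as a bounce but not as a short dwell, while a user who clicks through two pages in six seconds and heads back to the results is a short dwell but not a bounce.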

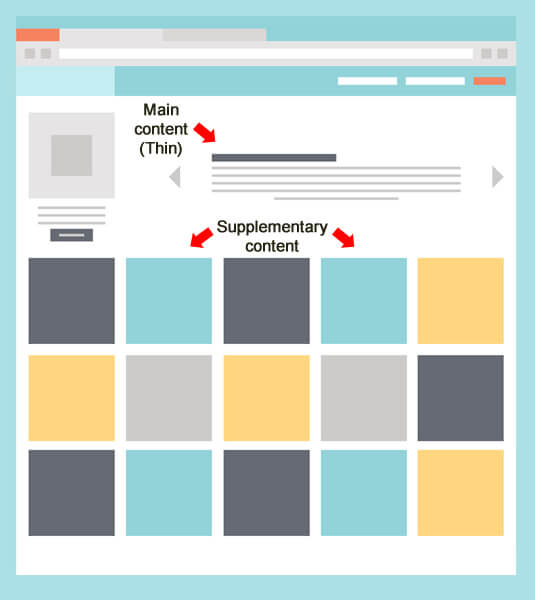

Low-quality supplementary content

I also saw many examples of horrible and unhelpful supplementary content. For example, if there’s an article on a specific subject, the supplementary content should be relevant and helpful. It shouldn’t contain a boatload of links to non-relevant content, contain deceptive and cloaked ads and so on.

It’s okay to have advertising, but make sure it can easily be identified as such. And help your users find more of your great content. Make sure the links you provide are relevant, helpful, and enhance the user experience.

Side note: Phantom eats rich snippets — scary, but true

One interesting side note (which I’ve mentioned in my previous posts about Phantom) is that when quality updates have rolled out, sites have gained or lost rich snippets. So it seems the algorithm has something built in that can strip or provide rich snippets. Either that, or Google is updating the rich snippets algorithm at the same time (tied to the quality threshold for displaying rich snippets that Google has mentioned several times).

So, not only can quality updates impact rankings, but it seems they can impact SERP treatment, too.

Net-net

Again, I chose to list several examples of what I’ve seen during my Phantom travels in this post, but I did not cover all of the low-quality situations I’ve seen. There are many!

Basically, the combination of strong user experience and high-quality content wins. Always try to exceed user expectations (from both a content standpoint and UX standpoint). Don’t deceive users, and don’t fall into the excessive monetization trap. That includes both desktop and mobile.

Remember, hell hath no fury like a user scorned. From what I’ve seen, users have a (new) voice now, and it’s called Phantom. Don’t make it angry. It probably won’t work out very well for you.

Exorcising the Phantom: what webmasters can do now

If you’ve been impacted by a quality update, or several, what can you do now? Below, I’ll provide some bullets containing important items that you can (and should) start today. And the first is key.

- If you’ve been impacted negatively, don’t wait. Even Google’s John Mueller has said not to. Check 30:14 in the video.

- Objectively measure the quality of your site. Go through your site like a typical user would. And if you have a hard time doing this objectively, have others go through your site and provide feedback. You might be surprised by what you surface.

- Perform a thorough crawl analysis and audit of your site through a quality lens. This is always a great approach that can help surface quality problems. I use both DeepCrawl and Screaming Frog extensively. (Note: I’m on the customer advisory board for DeepCrawl and have been a huge fan of using it for enterprise crawls for years.)

- Understand the landing pages that have dropped, the queries those pages ranked for, and put yourself in the position of a user arriving from Google after searching for those queries. Does your content meet or exceed expectations?

- Understand the “annoyance factor”: popups, excessive pagination, deception, UIs breaking, thin content, user unhappiness and so on. Based upon my analyses, all of these are dangerous elements when it comes to Phantom.

- Use “fetch and render” in Google Search Console to ensure you aren’t presenting problems to Googlebot. I’ve seen this a number of times with Phantom hits. If Google can’t see your content, it will have a hard time indexing and ranking that content.

- Fix everything as quickly as you can, check all changes in staging, and then again right after you roll them out to production. Don’t make the situation worse by injecting more problems into your site. I’ve seen this happen, and it’s not pretty.

- Similar to what I’ve said to Panda victims I have helped over the years, drive forward like you’re not being negatively impacted. Keep building high-quality content, meeting and exceeding user expectations, building new links naturally, building your brand, and more. Fight your way out of the ectoplasm. Remember, Google’s quality updates seem to require a refresh. Don’t give up.
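Some of the audit items above can be triaged with a short script once you have crawl data in hand. The following is a minimal Python sketch, assuming a hypothetical list of page records exported from a crawler. The field names (`word_count`, `ad_count`, `pagination_pages`, `has_interstitial`) and every threshold are illustrative assumptions. They are not the export format of DeepCrawl or Screaming Frog, and they are not values Google has published.

```python
from typing import Dict, List

# Illustrative thresholds only; adjust them for your own site and niche.
MIN_WORDS = 300
MAX_ADS = 3
MAX_PAGINATION_PAGES = 5


def flag_quality_issues(page: Dict) -> List[str]:
    """Return the possible quality issues for one hypothetical crawl record."""
    issues = []
    if page.get("word_count", 0) < MIN_WORDS:
        issues.append("thin content")
    if page.get("ad_count", 0) > MAX_ADS:
        issues.append("heavy advertising")
    if page.get("pagination_pages", 1) > MAX_PAGINATION_PAGES:
        issues.append("excessive pagination")
    if page.get("has_interstitial", False):
        issues.append("popup or interstitial")
    return issues


def audit(pages: List[Dict]) -> Dict[str, List[str]]:
    """Map each URL that has at least one flag to its list of issues."""
    report = {}
    for page in pages:
        issues = flag_quality_issues(page)
        if issues:
            report[page["url"]] = issues
    return report
```

Pages that collect several flags at once are good candidates for a manual review first, since drops tend to involve a combination of problems rather than a single smoking gun. A script like this only narrows down where to look; it does not replace going through the site like a typical user would.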

Summary: Panda is in stealth mode, Phantom is front and center

With Panda now in stealth mode, there’s another algorithm that SEOs need to be aware of. We’ve seen a number of Google Quality Updates (aka Phantom) since May of 2015, and in my opinion, they heavily focus on “low-quality user experience.” I highly recommend reviewing your analytics reporting, understanding whether you’ve been impacted, and then forming a plan of attack for maintaining the highest level of quality possible on your website.

Good luck, and be nice to Phantoms this Halloween!

Contributing authors are invited to create content for Search Engine Land and are chosen for their expertise and contribution to the search community. Our contributors work under the oversight of the editorial staff and contributions are checked for quality and relevance to our readers. The opinions they express are their own.
