SMX West: Solving SEO Issues in Google’s Post-Update World

Now that Google no longer regularly announces algorithm updates, it can be hard to tell when your site's been hit by one. Luckily, there are steps you can take to determine if you've been hit. Contributor Mark Traphagen sums up a session from SMX West on this topic.

How do we deal with huge and sudden changes in organic search traffic now that Google no longer posts major updates to its algorithms? Since many updates that formerly would have been assigned a name (like Penguin or Panda), or even a name and an incremental number (Panda 3.0) are now part of Google’s core algorithm, how can we know when an update is responsible for a traffic or ranking change? And even if we do know, what can we do with that knowledge?

Kristine Schachinger and Glenn Gabe answered those questions and more in “Solving SEO Issues In Google’s Post-Update World,” a session at SMX West 2017. The following is a summary of their presentations.

Busting Google’s black box

Presenter: Kristine Schachinger, @schachin

Some SEOs and webmasters may find themselves wishing for the days of Matt Cutts. Cutts was a Google engineer and the head of Google’s webspam team. More importantly to marketers, Matt was the “face” of Google search, regularly speaking at conferences, creating informative videos and posting news about Google search on social media. During Cutts’ tenure at Google, he could usually be depended upon to provide announcements of major algorithm updates.

Then two things happened: Matt Cutts left Google to serve with the US Digital Service, and Google stopped announcing most algorithm updates.

Since Cutts’ departure, the role of Google spokesperson has been split among several Googlers, most prominently Gary Illyes and John Mueller. But these new spokespeople don’t fulfill the same role as Matt Cutts, and they don’t necessarily have his level of insight into the algorithms (Cutts actually helped write the search algorithms). Illyes and Mueller have seemed more reluctant than Cutts was to confirm or speak about possible updates. In addition, Google announced that many key updates had been “rolled up” into the core algorithm. That meant they would be tweaked regularly, but major updates would no longer be announced.

Other changes have affected the ability of SEOs and webmasters to assess whether changes to their traffic are the result of Google updates. For example, Penguin is now real-time, which means it finds questionable links during normal crawls and devalues them as it crawls the sites to which they link. Gone are the days when webmasters had to wait, sometimes for months or even years, for the next major update of Penguin before their sites could recover from a penalty. But that also means it’s harder to assess whether Penguin is to blame for a traffic loss.

Did you get burned?

So now, when a webmaster or SEO notices a sudden drop in organic search traffic, the question of whether an update by Google (and which one, if any) is to blame is a lot harder to answer. Fortunately, there are third-party sites and SEOs who keep track of major fluctuations in ranking and serve as a “first alert” network for possible algorithm updates. Schachinger recommended following these:

- Glenn Gabe

- Google Webmaster Central Blog

- Gary Illyes

- John Mueller

- Search Engine Roundtable (Barry Schwartz)

- The SEM Post (Jennifer Slegg)

Maybe yes, maybe no

However, the fact that an update may have happened on or near the date of your traffic or ranking loss does not mean the update was to blame. You need to become a detective, and first eliminate all other possible causes. Treat your site history like a medical history. For example, if you’ve had a problem with bad links in the past, you might still have it (or have it again).

You need to beware of falling for “ducks” (just because it walks like a duck, and talks like a duck…).

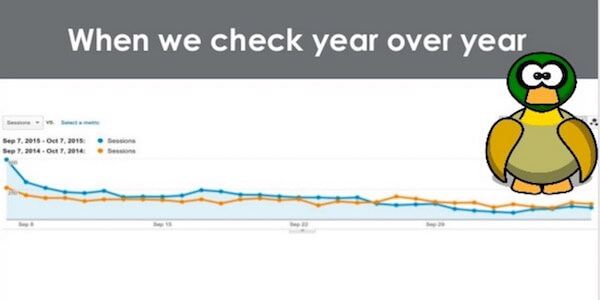

For example, this looks like a penalty:

However, a deeper investigation into the analytics history reveals that this is a normal seasonal dip that takes place every year:
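One rough way to test for seasonality is to compare the size of the current dip with the dip over the same months a year earlier. The sketch below assumes you have monthly organic session counts on hand; the function names, the sample numbers and the 10-point tolerance are illustrative assumptions, not something presented in the session.

```python
def pct_change(before, after):
    """Percentage change from `before` to `after`."""
    return (after - before) / before * 100.0


def looks_seasonal(this_year, last_year, dip_months, tolerance=10.0):
    """Return True if this year's dip resembles last year's dip.

    `this_year` and `last_year` map month number (1-12) to organic sessions.
    `dip_months` is a (month_before_dip, month_of_dip) pair.
    `tolerance` is the allowed gap, in percentage points (an assumed value).
    """
    before, during = dip_months
    current = pct_change(this_year[before], this_year[during])
    previous = pct_change(last_year[before], last_year[during])
    # Seasonal only if last year also dipped, and by a similar amount.
    return previous < 0 and abs(current - previous) <= tolerance


# Hypothetical numbers: November vs. December sessions.
last_year = {11: 10000, 12: 7000}
this_year = {11: 12000, 12: 8500}
print(looks_seasonal(this_year, last_year, (11, 12)))  # True
```

A dip that is much deeper than last year's would return False, which is a cue to keep investigating rather than proof of a penalty.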

The bigger picture

We’re never going to achieve perfection in our SEO, since we can never know exactly all the factors that affect ranking and traffic behavior. So our aim should be to measure our “distance from perfect” (a concept coined by Portent Digital Marketing). That is, try to determine how close or far your site is from the ideals a search algorithm looks for.

First ask which (if any) algorithms might be affecting your site.

- Internal algorithms (on-page, technical, etc.)

  - Panda, Phantom, Penguin

- External algorithms (links, citations, etc.)

  - Penguin

- Third-party influence algorithms (ads, etc.)

  - Panda, Phantom

Let’s look at some of those core algorithms in a bit more detail.

Panda

This algorithm update is focused primarily on content quality, but also some elements of site quality. The last confirmed Panda update was in October of 2015. According to Gary Illyes of Google, Panda is now part of Google’s core ranking algorithm.

Here is what you need to know about Panda and content quality:

- Frowns on “thin” content. Length of content can matter (as a signal of time, effort and completeness), but there are exceptions.

- Duplicate content: NOT a penalty, but it does confuse Google as to which page you want to have ranking. Make sure all your titles and descriptions are unique.

- Content clarity: make sure all your content is well-written and focused on its topic.

- Content specificity: make sure content is relevant to your key topics.

- Content length (these are all rough suggestions, not hard and fast rules!)

  - Blog posts should be about 350–550 words.

  - In-depth articles should be 750+ words.

- Content technical issues: grammar, spelling, readability

- Site architecture problems (confirmed by John Mueller in February 2017)

- Too many advertisements on page, especially if they make it difficult to find the content

- Site load time: especially TTFB (time to first byte). Should be under .80ms.

Phantom

Phantom was the name given by SEOs to an unnamed Google quality update. Phantom is sometimes referred to as “Panda Plus.” It has a wider quality focus than Panda, including UX/UI issues.

Similar to Panda, Phantom focuses on low-quality pages, though it can change its focus from time to time — it acts as a sort of catch-all quality algorithm. Updates are typically not confirmed by Google.

Likely areas Phantom goes after:

- Aggressive ad placements

- Content issues

- Crawl issues

- Deceptive ads, hidden affiliate links, any attempts to deliberately deceive visitors

- Thin content

- Bad UI/UX

- Interstitials

Panda or Phantom? How can you know? The easy answer: it doesn’t matter! Remember, your goal should always be to reduce your “distance from perfect.” Assess your site by the things we know Google looks for in a good site, and adjust accordingly.

Penguin

Originally launched in April 2012, Penguin focuses primarily on spammy or “unnatural” links, but it includes some other site quality factors as well.

Penguin updates 1.0–3.0 were “angry.” They went after the rankings of entire sites, and a penalized site could not recover until the next update, which could take months, or even years.

Penguin 4.0 was a gift, by comparison. It is now “real-time” (not instant, but demotions and recoveries take place at the time of a site recrawl), and granular, meaning Penguin affects single pages and not the whole site. Gary Illyes noted that this update now devalues spam rather than demoting a site’s rank.

Real-time Penguin is good news, since adjustments occur as soon as the page is crawled; however, Penguin is now harder to detect, since now only individual pages or sections of a site will drop in ranking, not the whole site.

Your pages can also be hit by an indirect Penguin effect, if some of the pages that link to them are hit by Penguin. The links from those pages to your pages will no longer pass along the ranking power they once did.

The only way to really recover now if Penguin impacts pages on your site is to get quality links to those pages to replace the ones that Penguin devalued. Google says you should still disavow any bad links to your site that you find, but they don’t say why.

Manual penalties

Sites can still be given manual penalties. Manual penalties occur when suspicious links and/or poor site quality or other search manipulations bring a site up for review by human assessors at Google. A manual penalty can still affect a whole site.

Manual penalties still require action to remediate the problem, and recovery requires a request for reconsideration.

Mobile updates

If you’re noticing specific issues with mobile traffic only, it might be due to a mobile algorithm update. Here are the main ones to keep track of:

Mobile-friendly update

For the last couple of years, Google has evaluated sites according to their “mobile-friendliness,” meaning that the site’s presence on mobile devices presents a good user experience. A “mobile-friendly” label used to appear next to sites that met this threshold in the search results, but no longer does. Google has announced two “mobile-friendly” algorithm updates so far, which allegedly devalue the ranking of non-mobile-friendly sites in mobile search results.

Intrusive ads & interstitials update

In August 2016, Google announced that it would begin to devalue the mobile rankings of sites that had certain kinds of interstitials that prevented users from being able to immediately see the actual content upon page load. In January 2017, they confirmed that the update had rolled out. So far, the rollout has been weak.

The interstitial penalty applies to the first click from a search results page only. Interstitials that load as a user navigates to other pages on the same site will not trigger the penalty.

Types of interstitials this update is supposed to be aimed at:

- Popups that cover the main content, either immediately after a page opens from a search result or while scrolling down that first page.

- Standalone interstitials that must be dismissed before the user can view the content.

- Layouts where the above-the-fold appearance acts as the equivalent of an interstitial, and the actual content must be scrolled to.

Some interstitials are not affected by the update and are still allowed:

- Interstitials required for legal obligations, such as informing the user of cookie use or for age verification.

- Login dialogs for non-public content requiring a password for access.

- Banners that use a reasonable amount of screen space and are easily dismissed, such as the app install banners provided by Safari and Chrome.

Final thoughts

- Keep decreasing your “distance from perfect” as your aim and you should not have to worry about algorithmic penalties.

- Remember that Google has said that content and links to your site are its two highest ranking factors. Work hard to maintain high quality with both.

- Assess your content quality according to the E-A-T acronym in Google’s quality guidelines: Expertise, Authoritativeness and Trustworthiness.

- The technical SEO health of your site could be considered ranking factor “2.5.” Often just eliminating technical problems can result in a traffic increase.

- Pay attention to increasing the speed of your site, especially on mobile. Use WebPageTest.org and Google’s PageSpeed Insights to test the speed of your site.

- Keep up to date on Google’s plans for mobile-first indexing. Mobile-first indexing is Google’s plan to eventually base its rankings for both mobile and desktop on the mobile version of sites. This means that all the content for which you want to rank must be available in the version of your site shown on mobile devices.

  - If you have a mobile m. site, make sure it is verified in Google Search Console.

  - If you have a responsive site, no changes are needed.

  - If you have AMP content only, you will be evaluated on your desktop content, but with a mobile-friendly boost.

  - Unlike desktop, content hidden in accordions or tabs will not be devalued.

  - If your site removes schema from mobile pages, you must add it back in order to get search snippets.

How to Track Unconfirmed Algorithm Updates

Presenter: Glenn Gabe, President, G-Squared Interactive, @glenngabe

The one constant of Google is that change is constant. Google pushes out between 500 and 1,000 changes every year. Some of those are major, most are minor. We also need to be aware that Google will sometimes roll out multiple updates at the same time.

Most algorithm updates are not announced, and these days that includes even the major ones. We were lucky to at least have Phantom (a name created by Gabe, but adopted by the SEO industry) confirmed in May 2015. A few updates are pre-announced, especially ones that Google wants to use to change webmaster behavior, such as the Mobile-Friendly and Mobile Interstitial updates. But most are neither announced nor confirmed.

So, how do we keep track of updates?

We have to assemble a mixture of human “barometers” and technology to identify major updates. Keep an ear to the ground and use the following as your alert system:

- Your own data and rank tracking

- Search Engine Roundtable

- SEOs who focus on algo updates (such as Gabe)

- Third-party search visibility tools

- Twitter chatter in the SEO community

- Webmaster forums

- SEO weather-tracking tools (such as Mozcast)

It’s important to note the date when you saw any radical change in your rankings and traffic, as you may be able to correlate that with any updates noted by the sources above. Major updates still occur every few months.
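As a minimal sketch of that correlation step, the snippet below checks which reported updates fall within a few days of the date you noticed a change. The two dates come from this article; the dictionary, the function name and the three-day window are assumptions for illustration. A match only flags a candidate; as noted earlier, other causes still need to be ruled out.

```python
from datetime import date


def updates_near(change_date, reported_updates, window_days=3):
    """Return names of reported updates within `window_days` of `change_date`."""
    return [
        name
        for name, update_date in reported_updates.items()
        if abs((update_date - change_date).days) <= window_days
    ]


# Illustrative log of updates you have noted from your alert sources.
reported = {
    "Mobile interstitial update": date(2017, 1, 10),
    "Core ranking update": date(2017, 2, 7),
}

# Suppose your traffic changed sharply on February 8, 2017.
print(updates_near(date(2017, 2, 8), reported))  # ['Core ranking update']
```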

How to prepare for battle with updates

You should have the right data and tools at hand to help you assess if updates have affected your site. Use third-party data to supplement your own. Also gather and track data from your niche (SEMrush is Gabe’s favorite tool for seeing this data from competitive sites). Be proactive, not reactive; try to spot possible problems before they get out of hand.

Be sure to track your ranking as well. Select a strong bucket of keywords from your niche. You need way more than your “top 10.” Track your competition as well. You’re looking for movement across an entire niche, not just your own site. Use tools like SEMrush, STAT, RankRanger, Moz or others to do this.

But no matter how intensive your efforts, you can’t rank-track the entire web. Tools such as SEMrush, Searchmetrics and Sistrix, however, do monitor many thousands of sites across the web, and so can show more accurately when major ranking fluctuations are occurring.

Algorithm weirdness

Sometimes Google will reverse a previous algorithm update. Or there can be “tremors” after a major update, in which some sites initially go down but after some corrections to the algorithm, go back up again.

Sometimes algorithm updates don’t work as expected. For example, Google’s mobile interstitial update was launched on January 10, 2017 (see the description in Kristine Schachinger’s presentation above). Gabe has tracked over 70 sites that should have been penalized by the update but has seen no widespread movement at all. The Mobile Interstitial Update appears to be a dud (so far).

Using Google tools and your own data

Nothing is better than your own data, particularly from Google Search Console (GSC) and your own analytics. Both GSC and Google Analytics have APIs, so you can download data to do your own more detailed analysis. You can also automate certain tasks so you are taking a regular pulse (more about that below).

Google Search Console

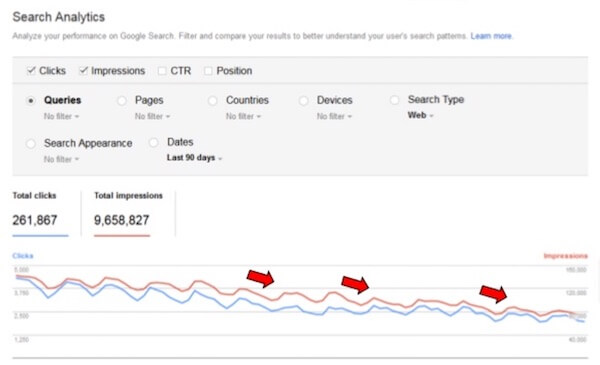

In Google Search Console, you’ll want to focus on the Search Analytics report. Track your clicks and impressions over time. Also watch Average Position (though that can be a bit funky) over time to identify increases and decreases in ranking.

Here’s an example of a gradual decrease in ranking during a recent core ranking update:

In Google Search Console, you can also compare time frames to one another and sort by loss of clicks or impressions. Just be aware that there is a limitation of 1,000 rows per report.
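If you export the two time frames, the same comparison can be reproduced offline. This is a small sketch, assuming each period has been loaded into a dictionary of query to clicks; the queries and numbers are made up, and `click_losses` is a hypothetical helper, not part of any Google tool.

```python
def click_losses(previous, current):
    """Return (query, delta) pairs sorted with the largest click loss first.

    Queries missing from `current` are treated as having zero clicks.
    """
    deltas = {
        query: current.get(query, 0) - clicks
        for query, clicks in previous.items()
    }
    return sorted(deltas.items(), key=lambda item: item[1])


# Hypothetical exports for two time frames.
previous = {"blue widgets": 120, "widget repair": 80, "buy widgets": 40}
current = {"blue widgets": 60, "widget repair": 85}

for query, delta in click_losses(previous, current):
    print(f"{query}: {delta:+d}")
# blue widgets: -60
# buy widgets: -40
# widget repair: +5
```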

Now it’s time to slice and dice your data. Isolate areas of your site by filtering the Pages group, or isolate keywords by filtering the Queries group. You can then view trends in the filtered data. GSC does not support Regex, but you can export the data to do your own manipulations. Gabe shared that his favorite tool for bulk-exporting GSC data is Analytics Edge. With this tool, you can download queries and landing pages way beyond GSC’s 1,000-row limit, and then use the power of Excel to dig deeper. Gabe has written two blog posts about this process.
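Once the data is exported, regular-expression filtering is straightforward outside the GSC interface. The sketch below uses Python rather than Excel purely for illustration; the row layout (`page`, `clicks`) and the example.com URLs are assumptions about what an export might look like.

```python
import re


def filter_pages(rows, pattern):
    """Keep rows whose 'page' value matches the regular expression."""
    compiled = re.compile(pattern)
    return [row for row in rows if compiled.search(row["page"])]


# Hypothetical exported rows.
rows = [
    {"page": "https://example.com/blog/seo-tips", "clicks": 40},
    {"page": "https://example.com/products/widget", "clicks": 95},
    {"page": "https://example.com/blog/2017/update", "clicks": 12},
]

# Isolate the blog directory and total its clicks.
blog_rows = filter_pages(rows, r"^https://example\.com/blog/")
print(sum(row["clicks"] for row in blog_rows))  # 52
```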

Rich snippets and algorithm updates

Rich Snippets are featured search results that display data or content from your page as part of the result. They also can be affected by algo updates. You can track your site’s appearance in rich snippets by using the GSC Search Appearance group and filtering by “rich results.”

You can also check the SERP Features widget in SEMrush to see if your rich snippets have been impacted by an algorithm update. For example, this feature will show you the number of times reviews are showing up for your domain, and the keywords that are triggering those reviews.

Google Analytics

First and foremost, make sure you are isolating Google organic traffic. You can also check organic search channel reporting for unsampled data (although that will include all search engines).

Here’s an example of a site that had been hit by Panda 4.0 but experienced a surge during the February 7, 2017, core ranking update:

Now it’s time to dig deeper, to the level of your individual pages. Dimension by landing pages, check the trends, and compare what you see to the previous time frame.

Filtering is key here. You can filter by directory (or via page type). In the advanced filters under advanced search, you can use regular expressions as well.

If you think you’ve been hit by Panda, it’s smart to check the landing pages from organic search where traffic dropped. Often you’ll see glaring problems on those pages, and then you’ll know what to fix. Gabe wrote a tutorial for running such a report in Excel that you can use for any update.

Other search engines

Another source to check in trying to confirm an algo update and its possible effects is search engines other than Google. Since these updates are unique to Google, if you see a parallel drop in traffic on both Google and Bing (for example), it probably indicates a technical problem with your site, and not a penalty or algorithm change. And remember to segment out Google from all other organic traffic. Of course, what you see in GSC is all Google.
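The reasoning above can be expressed as a simple check on per-engine traffic. This is only a sketch: the 20 percent drop threshold, the engine keys and the session counts are all assumptions, and the result is a hint about where to look, not a diagnosis.

```python
def pct_change(before, after):
    """Percentage change from `before` to `after`."""
    return (after - before) / before * 100.0


def diagnose(before, after, drop_threshold=-20.0):
    """Classify a traffic change from organic sessions per search engine.

    `before` and `after` map engine name to sessions and must both
    contain a "google" key. `drop_threshold` is an assumed cutoff.
    """
    google = pct_change(before["google"], after["google"])
    others = [
        pct_change(before[engine], after[engine])
        for engine in before
        if engine != "google"
    ]
    google_dropped = google <= drop_threshold
    others_dropped = bool(others) and all(c <= drop_threshold for c in others)
    if google_dropped and others_dropped:
        return "parallel drop: check for a technical problem"
    if google_dropped:
        return "Google-only drop: a Google update is plausible"
    return "no significant Google drop"


# Hypothetical sessions before and after the change.
print(diagnose({"google": 1000, "bing": 200}, {"google": 600, "bing": 120}))
# parallel drop: check for a technical problem
```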

Mobile SERPs and traffic

You should also look at your mobile organic search traffic separately. If all the drop is there, then you have a problem with how your mobile site is set up, or with one of Google’s mobile-specific updates (Mobile Friendly, Interstitials, and in the future, Mobile First Indexing).

In GSC, use the devices group to check clicks and impressions from mobile devices. Compare this to your desktop results, and be sure to include average position in your assessment.

In Google Analytics, create a segment for mobile traffic, then use that segment to assess with the recommendations made above. If you find that mobile pages dropped more than desktop pages, dig in and find out why.

A final word about ‘Fred’

An apparent major update that happened around March 7, 2017, is a good case study in how these updates look now, and what kind of response we can expect from Google. On that date, many sites surged, while others tanked. Almost all the tools mentioned earlier showed large volatility on or around that date, so it was easy to see. Initially, no one at Google would confirm the update, but when several on Twitter pressed Gary Illyes about it, he jokingly named it “Fred” after one of his pet fish, and the name stuck.

Here’s an example of two sites impacted by Fred in opposite ways:

Final tips and recommendations

- Be proactive, not reactive.

- Use a multifaceted approach for tracking algorithm updates.

- Set up rank tracking.

- Monitor search visibility across your niche.

- Track those “human barometers,” and let them know if you think you see something significant.

- Monitor industry chatter.

- Leverage the full power of Google tools (GSC and GA).

- Surface your quality problems, fix them, and always work to improve your overall quality.

- Keep moving forward and wait for the next update (could be months away).

