Ask the SMXperts — Page speed, site migrations and crawling

Our SMXperts share their tactical insights on optimizing crawl budgets, fine-tuning page speed and executing large-scale, error-free site migrations.

The Ask an SMXpert series continues the Q&A segment held during sessions at SMX Advanced 2018 in Seattle.

Today’s Q&A is from the Advanced Technical SEO: Page Speed, Site Migrations and Crawling session featuring Melody Petulla and Brian Ussery.

Session overview

Technical SEO has always been a critical component of search marketing; it is one of the foundations of ranking success.

In this session, our experts dove into tactics for optimizing crawl budget, fine-tuning page speed and executing large-scale, error-free site migrations. They covered:

- An in-depth understanding of the factors that impact what, when and how much of your site content search engines will crawl.

- Tips and tricks on how best to maximize your site’s “crawl budget” to ensure your most important content remains forever accessible.

Melody Petulla, Merkle

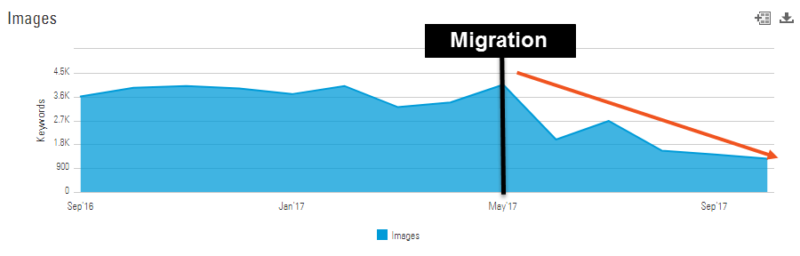

Question: Do you recommend 301 redirecting image URLs when changing domains?

Melody: Excellent question! Yes, redirecting your images can definitely help preserve ranking signals for image search, and we’ve seen declines in image rankings and traffic for sites that fail to redirect their images.

If you think about it, images build up the same types of ranking signals over time as regular web pages. Failing to redirect images would essentially be seen by bots as releasing a set of entirely new images; just like web pages, it will take some time for those new resources to build up the ranking signals needed to be competitive in image search.

That said, traffic to images usually only makes up a tiny percentage of overall organic traffic; analyzing those numbers can help you prioritize image redirects in your overall migration plan.
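One way to act on that prioritization is to turn an image traffic export into a redirect map, highest-traffic images first. The sketch below is illustrative only: it assumes you already have per-image click counts (the `OLD_IMAGES` data, the `min_clicks` cutoff and the `image_redirect_map` helper are all hypothetical) and that images keep the same path on the new domain.

```python
from urllib.parse import urlsplit, urlunsplit

# Hypothetical input: old-domain image URLs and their organic clicks,
# e.g. exported from an analytics or Search Console report.
OLD_IMAGES = {
    "https://old.example.com/img/hero.jpg": 1200,
    "https://old.example.com/img/spacer.gif": 0,
    "https://old.example.com/img/product-42.png": 85,
}

def image_redirect_map(image_clicks, new_host, min_clicks=10):
    """Return {old_url: new_url} for images with at least `min_clicks`,
    swapping only the host and keeping path and query unchanged."""
    redirects = {}
    # Sort by clicks, descending, so the map doubles as a priority list.
    for url, clicks in sorted(image_clicks.items(), key=lambda kv: kv[1], reverse=True):
        if clicks < min_clicks:
            continue  # low-value images (spacers, backgrounds) are skipped
        parts = urlsplit(url)
        redirects[url] = urlunsplit(("https", new_host, parts.path, parts.query, ""))
    return redirects

if __name__ == "__main__":
    for old, new in image_redirect_map(OLD_IMAGES, "www.example.com").items():
        print(f"301 {old} -> {new}")
```

The cutoff is a judgment call: set it from your own traffic data, and lower it if image search matters to the business.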

Question: What/where are the key places you monitor in the early days/weeks of a migration to ensure a smooth transition took place?

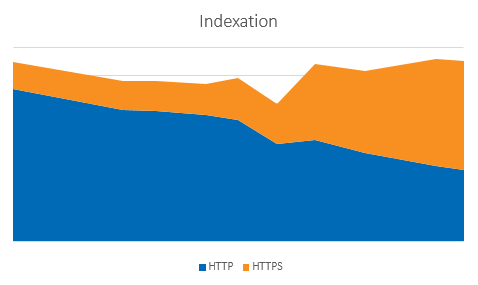

Melody: First and foremost, we like to look at indexation (both of the old site and the new site). This can be done either through the Index Status/Index Coverage reports in Google Search Console (GSC) or via site:example.com searches directly in Google.

We usually like to look at both to cover our bases. Since it can take some time (sometimes months) for rankings and traffic to normalize, those can be hairy metrics to look at for judging migration success in the short term.

You likely will not see indexation change over all at once, but you should see steady de-indexation of the old site at the same rate that the new site is being indexed. As long as indexation continues to change at a steady rate, and combined indexation of both the old and new sites looks normal, you should be in good shape.

Watch for both significant increases and declines in combined indexation; if you start to notice significantly more or fewer total indexed pages than you were expecting, there may be unexpected errors hiding beneath the surface. In addition to indexation, make sure to monitor crawl error reports regularly to try to catch as many issues as possible as quickly as possible.
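The "combined indexation" check can be made mechanical if you record old-site and new-site indexed counts on a regular cadence. This is a minimal sketch under stated assumptions: the counts are entered by hand from GSC or site: searches, the expected total is your own estimate, and the 15 percent `tolerance` and the sample `SNAPSHOTS` are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class IndexSnapshot:
    day: str
    old_indexed: int  # pages still indexed on the old site
    new_indexed: int  # pages indexed on the new site

def flag_combined_anomalies(snapshots, expected_total, tolerance=0.15):
    """Return (day, combined, deviation) for days where old + new indexed
    pages differ from expected_total by more than `tolerance` (a fraction)."""
    flagged = []
    for s in snapshots:
        combined = s.old_indexed + s.new_indexed
        deviation = (combined - expected_total) / expected_total
        if abs(deviation) > tolerance:
            flagged.append((s.day, combined, round(deviation, 2)))
    return flagged

# Illustrative numbers only.
SNAPSHOTS = [
    IndexSnapshot("day-1", 9800, 300),
    IndexSnapshot("day-7", 7000, 3100),
    IndexSnapshot("day-14", 4000, 3500),   # combined drop: pages may be blocked
    IndexSnapshot("day-21", 2000, 12000),  # combined spike: duplicates or parameters?
]

if __name__ == "__main__":
    for day, combined, dev in flag_combined_anomalies(SNAPSHOTS, expected_total=10000):
        print(f"{day}: {combined} indexed ({dev:+.0%} vs. expected)")
```

A flagged day is a prompt to dig into crawl error reports, not proof of a problem on its own.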

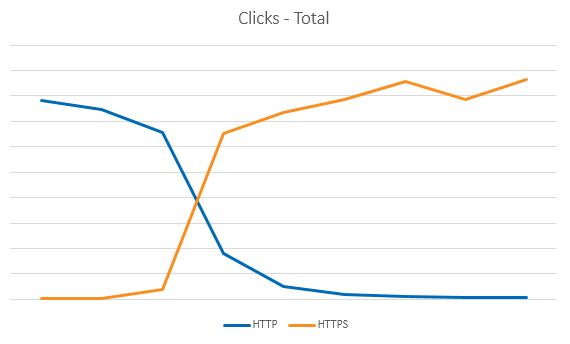

Traffic and impressions are great long-term metrics as well. Like indexation, you should see traffic and impressions shift at an equal rate from the old site to the new. Just keep in mind that traffic issues can be a symptom of many different things, so use traffic as a supplemental metric, not the primary key performance indicator (KPI). In general, though, regular performance reporting should include traffic from both sites for at least a few months to make sure it’s comprehensive.

Question: Have you migrated a subdomain before, and do you have any takeaways to share?

Melody: We’ve migrated quite a few subdomains, actually (both changing from subfolder to subdomain and vice versa). I can’t speak conclusively to the performance impact of changing between subfolders and subdomains, but from a migration standpoint, they operate largely like any other migration with all of the same requirements.

The main thing that’s different between subdomain migrations and simple URL structure changes is that each subdomain requires its own XML sitemaps and robots.txt file, separate from those of your core domain, so make sure you’re addressing those appropriately.
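The reason each subdomain needs its own robots.txt is that crawlers look for the file at the root of each scheme and host (and port) combination. The sketch below, with hypothetical helper names and example URLs, lists the robots.txt locations a set of URLs will be governed by, which is a quick way to see which files a subdomain migration has to create.

```python
from urllib.parse import urlsplit

def robots_txt_url(page_url):
    """robots.txt applies per scheme + host (+ port), so a subdomain such as
    blog.example.com is governed by its own file, not by www's."""
    parts = urlsplit(page_url)
    return f"{parts.scheme}://{parts.netloc}/robots.txt"

def robots_files_needed(urls):
    """Distinct robots.txt locations that must exist for a set of URLs."""
    return sorted({robots_txt_url(u) for u in urls})

if __name__ == "__main__":
    urls = [
        "https://www.example.com/guides/seo",
        "https://blog.example.com/post-1",
        "https://www.example.com/shop/",
    ]
    for location in robots_files_needed(urls):
        print(location)
```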

Additionally, we’ve found that moving URLs from www to a separate subdomain (or vice versa) can require more advanced redirect logic if you also need to change the URL structure after the domain (the path) to fit the new environment or platform. Make sure to use 1:1 redirects with no redirect chains if possible.
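When redirect rules accumulate across several migrations, chains creep in (A to B, then B to C). A minimal sketch, assuming your redirect plan is kept as a simple source-to-target mapping (the `flatten_redirects` helper is hypothetical), that rewrites every source to point straight at its final destination and refuses loops:

```python
def flatten_redirects(redirect_map, max_hops=10):
    """Rewrite {source: target} so every source points directly at its final
    destination (1:1, no chains). Raises ValueError on loops or long chains."""
    flat = {}
    for source, target in redirect_map.items():
        seen = {source}
        hops = 1
        # Keep following while the current target is itself redirected.
        while target in redirect_map:
            if target in seen or hops >= max_hops:
                raise ValueError(f"redirect loop or excessive chain starting at {source}")
            seen.add(target)
            target = redirect_map[target]
            hops += 1
        flat[source] = target
    return flat

if __name__ == "__main__":
    plan = {
        "https://www.example.com/old-shoes": "https://www.example.com/shoes",
        "https://www.example.com/shoes": "https://shop.example.com/shoes",
    }
    for src, dst in flatten_redirects(plan).items():
        print(f"301 {src} -> {dst}")
```

The same mapping can then be exported to whatever server or CDN rule format your platform uses.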

Question: After a migration (URL, not domain), if I’m still in the recovery period (eight to 10 weeks out), should I avoid making other changes to the site? Or can I resume A/B testing and content updates?

Melody: It should be totally fine to continue making other changes to the site (as long as they do not disrupt your redirects). The only things that might be best to defer until indexation has fully changed over are changes to canonicals or noindex that could confuse your indexation signals. Sending confusing signals or signals that are changing constantly could extend the time it takes for bots to properly update the index with your new intended pages.

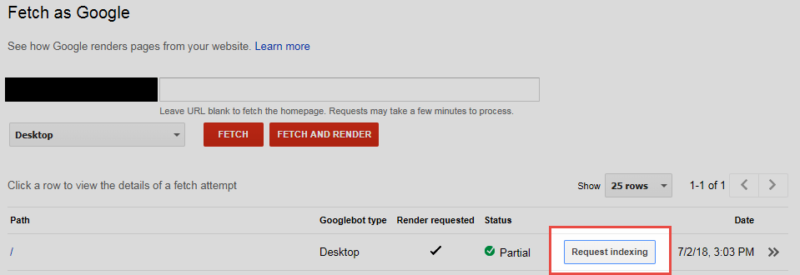

Question: After a migration: When you use the “Fetch” tool in GSC to submit primary pages, how deep does Google crawl from that submitted page? Meaning: Do they only crawl all links on that submitted page? Or will they crawl even deeper?

Melody: As far as I know, you can elect to have Google crawl all of the links that exist on the page you’re submitting, but they will not crawl deeper simply from using the Fetch as Google tool. For that reason, we recommend submitting a variety of pages at different levels on the site. Note that you can also continue submitting different pages on a regular cadence to help deeper pages get crawled a bit faster.
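Submitting "a variety of pages at different levels" can be planned ahead of time. The Fetch step itself happens in the GSC interface; this hypothetical helper only picks candidates, grouping URLs (for example, from your XML sitemap) by path depth and taking a few from each level. Treating path depth as a proxy for site level is an assumption; click depth from a crawler would be more precise.

```python
from collections import defaultdict
from urllib.parse import urlsplit

def sample_by_depth(urls, per_depth=2):
    """Group URLs by path depth (number of non-empty path segments) and take
    the first `per_depth` from each level, shallowest level first."""
    by_depth = defaultdict(list)
    for url in urls:
        depth = len([seg for seg in urlsplit(url).path.split("/") if seg])
        by_depth[depth].append(url)
    sample = []
    for depth in sorted(by_depth):
        sample.extend(by_depth[depth][:per_depth])
    return sample

if __name__ == "__main__":
    urls = [
        "https://www.example.com/",
        "https://www.example.com/shoes/",
        "https://www.example.com/shoes/running/",
        "https://www.example.com/shoes/running/model-1",
        "https://www.example.com/bags/",
        "https://www.example.com/hats/",
    ]
    for url in sample_by_depth(urls):
        print(url)
```

Rotating which URLs you take from each level on later days spreads submissions across more of the site.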

Brian Ussery, SapientRazorfish

Question: Do you recommend 301 redirecting image URLs when changing domains?

Brian: Google recommends 301 redirecting old image URLs to new image URLs. Google Webmaster Trends Analyst John Mueller addressed this topic during a 2016 Webmaster Hangout.

From a resource perspective, I typically only recommend 301 redirecting images that rank well and drive search traffic for keywords aligned with business goals, not generic images, spacers, backgrounds and the like.

Google does not crawl images as frequently as pages, so without redirects it can take a long time for Google to determine that images have moved. Googlebot discovers images during the regular crawl process and earmarks them for later crawling by Googlebot-Image. When an image is indexed at a URL that no longer exists, Google has to expend additional resources to determine what happened. Redirecting old image URLs sends a strong signal that an image has moved. Because Google does not use signals like rel=canonical for images, 301 redirecting is really the only option in this scenario.

Don’t forget to ensure images are visually identical, or take the opportunity to optimize new images for speed: for instance, remove unnecessary images from new pages, use optimal formats and compression, check things like bit depth and reduce palette size when possible.

Question: What/where are the key places you monitor in the early days/weeks of a migration to ensure a smooth transition took place?

Brian: After a migration, I manually monitor as many pages as possible on desktop and mobile, as well as analyze data from tools like Screaming Frog, Google Search Console, PageSpeed Insights, Google’s Mobile-Friendly Test and analytics. It is important to remember that analytics only tells you what users did, not what they intended to do.

Don’t forget to check and start monitoring the search engine result pages. Upload categorized XML sitemaps and submit them to Bing and Google, in addition to adding the sitemap URLs to robots.txt. Fetch new pages and their direct links via Google Search Console.
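Categorized sitemaps and the matching robots.txt lines can be generated from a URL list with the standard library. A minimal sketch following the sitemaps.org format; grouping by first path segment is an assumed categorization scheme, and the helper names and example URLs are hypothetical. Submission to Google and Bing still happens in their webmaster tools.

```python
import xml.etree.ElementTree as ET
from collections import defaultdict
from urllib.parse import urlsplit

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def split_by_section(urls):
    """Assumed categorization: group URLs by their first path segment."""
    sections = defaultdict(list)
    for url in urls:
        segments = [s for s in urlsplit(url).path.split("/") if s]
        sections[segments[0] if segments else "root"].append(url)
    return dict(sections)

def build_sitemap(urls):
    """Serialize `urls` as a sitemaps.org <urlset>; ElementTree escapes '&'."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body

def robots_sitemap_lines(sitemap_urls):
    """`Sitemap:` directives (absolute URLs) to append to robots.txt."""
    return "\n".join(f"Sitemap: {u}" for u in sitemap_urls)

if __name__ == "__main__":
    pages = [
        "https://www.example.com/",
        "https://www.example.com/blog/post-1",
        "https://www.example.com/shop/shoes?color=red&size=9",
    ]
    locations = []
    for section, section_urls in split_by_section(pages).items():
        print(f"--- sitemap-{section}.xml ---")
        print(build_sitemap(section_urls))
        locations.append(f"https://www.example.com/sitemap-{section}.xml")
    print(robots_sitemap_lines(locations))
```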

For e-commerce sites, watch out for mixed-content browser errors (HTTP resources loaded on HTTPS pages), and ensure users can actually add items to the cart and check out.
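A quick first pass for mixed content is to scan each page’s HTML for subresources still loaded over http://. This sketch uses Python’s built-in `html.parser`; the tag list is an assumption and not exhaustive, and it cannot see resources injected by JavaScript or referenced from CSS, so the browser console remains the authoritative check.

```python
from html.parser import HTMLParser

class MixedContentFinder(HTMLParser):
    """Collect http:// subresource URLs referenced by a page served over HTTPS."""
    # Assumed, non-exhaustive mapping of tag -> attribute that loads a resource.
    SUBRESOURCE_ATTRS = {
        "img": "src", "script": "src", "iframe": "src",
        "source": "src", "audio": "src", "video": "src",
        "link": "href",  # any <link>, which also catches leftover http:// canonicals
    }

    def __init__(self):
        super().__init__()
        self.insecure = []

    def handle_starttag(self, tag, attrs):
        wanted = self.SUBRESOURCE_ATTRS.get(tag)
        for name, value in attrs:
            if name == wanted and value and value.lower().startswith("http://"):
                self.insecure.append((tag, value))

def find_mixed_content(html):
    finder = MixedContentFinder()
    finder.feed(html)
    finder.close()
    return finder.insecure

if __name__ == "__main__":
    page = '<img src="http://cdn.example.com/a.png"><script src="https://cdn.example.com/app.js"></script>'
    print(find_mixed_content(page))
```

Plain links (`<a href>`) are deliberately ignored, because navigating to an http:// page does not trigger a mixed-content error.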

Once the new site is confirmed operational, I check old site URLs to ensure they are properly redirected and for things like redirect chains or soft 404s.
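Checking old URLs can be scripted by following each redirect hop yourself instead of letting a client follow them silently. A sketch under stated assumptions: `audit_redirect` and its result labels are hypothetical, and the "redirects to the homepage" rule is only a rough soft-404 heuristic. The `fetch` function is passed in so the logic can be tested offline; `http_fetch` is one possible implementation using `http.client`, which does not follow redirects on its own (some servers answer HEAD differently from GET).

```python
import http.client
from urllib.parse import urljoin, urlsplit

REDIRECTS = {301, 302, 303, 307, 308}
PERMANENT = {301, 308}

def http_fetch(url):
    """Return (status, Location header) for one request, without following redirects."""
    parts = urlsplit(url)
    cls = http.client.HTTPSConnection if parts.scheme == "https" else http.client.HTTPConnection
    conn = cls(parts.netloc, timeout=10)
    try:
        path = (parts.path or "/") + (f"?{parts.query}" if parts.query else "")
        conn.request("HEAD", path)
        resp = conn.getresponse()
        return resp.status, resp.getheader("Location")
    finally:
        conn.close()

def audit_redirect(url, fetch, max_hops=5):
    """Follow redirects from an old URL and return (label, hop_statuses, final_url)."""
    hops, current, seen = [], url, {url}
    status, location = fetch(current)
    while status in REDIRECTS and location:
        hops.append(status)
        current = urljoin(current, location)  # Location may be relative
        if current in seen or len(hops) > max_hops:
            return "loop_or_too_long", hops, current
        seen.add(current)
        status, location = fetch(current)
    if not hops:
        return ("not_redirected" if status == 200 else f"status_{status}"), hops, current
    if status != 200:
        return f"redirects_to_{status}", hops, current
    if urlsplit(current).path in ("", "/") and urlsplit(url).path not in ("", "/"):
        return "possible_soft_404", hops, current  # deep URL sent to the homepage
    if len(hops) > 1:
        return "chain", hops, current
    if hops[0] not in PERMANENT:
        return "not_permanent", hops, current
    return "ok", hops, current
```

Running `audit_redirect(old_url, http_fetch)` over a full list of old URLs (for example, from a pre-migration crawl) gives a quick triage report.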

Question: After a migration (URL, not domain), if I’m still in the recovery period (eight to 10 weeks out), should I avoid making other changes to the site? Or can I resume A/B testing and content updates?

Brian: After a site migration, and depending on the size of the site, I would not recommend making unnecessary additional changes for several months if at all possible.

Question: I noticed you didn’t mention upgrading to HTTP/2 as one of your 10 suggestions. Was this because it’s a higher level of effort than the things you can do now? Or was it because you don’t see HTTP/2 having that much impact?

Brian: HTTP/2 is a great way to help improve performance, but it is currently leveraged by only about 30 percent of websites. I did not mention HTTP/2 specifically because it requires more effort, and it has to be sold to stakeholders at the enterprise level. Sometimes it is easier to build a case for the C-suite when you can say we have done everything else we can do except HTTP/2.

Question: Assuming one is not concerned with performance degradation, is crawl budget a concern?

Brian: Crawl budget is a concern when it comes to crawl efficiency, coverage, new pages, freshness and other factors. Engines can only spend so much time crawling your site. You don’t want engines spending all of that time crawling URLs that return a 404. You want efficient, even coverage to help ensure freshness and for engines to find new pages.
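Server logs show where crawl time actually goes. A minimal sketch, assuming logs in the common Apache/Nginx "combined" format (the regex and helper are hypothetical; adjust them to your server’s format), that tallies Googlebot requests by status code and reports the share that did not return 200. Matching on the user-agent string alone can be spoofed, so verify important findings with a reverse DNS lookup, and keep in mind that some redirects are expected right after a migration.

```python
import re
from collections import Counter

# Combined Log Format:
# ip ident user [time] "METHOD path PROTOCOL" status size "referer" "user-agent"
LOG_LINE = re.compile(
    r'^\S+ \S+ \S+ \[[^\]]+\] "(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

def googlebot_status_breakdown(lines):
    """Return (Counter of status codes, share of non-200 responses) for
    requests whose user-agent claims to be Googlebot."""
    counts = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if not match or "Googlebot" not in match.group("agent"):
            continue
        counts[match.group("status")] += 1
    total = sum(counts.values())
    non_200 = total - counts.get("200", 0)
    return counts, (non_200 / total if total else 0.0)

BOT = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
SAMPLE_LOG = [
    f'66.249.66.1 - - [12/Jun/2018:10:00:00 +0000] "GET /shoes HTTP/1.1" 200 5120 "-" "{BOT}"',
    f'66.249.66.1 - - [12/Jun/2018:10:00:01 +0000] "GET /old-page HTTP/1.1" 404 312 "-" "{BOT}"',
    f'66.249.66.1 - - [12/Jun/2018:10:00:02 +0000] "GET /bags HTTP/1.1" 301 0 "-" "{BOT}"',
    '203.0.113.5 - - [12/Jun/2018:10:00:03 +0000] "GET /shoes HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (Windows NT 10.0)"',
    f'66.249.66.1 - - [12/Jun/2018:10:00:04 +0000] "GET /hats HTTP/1.1" 200 2048 "-" "{BOT}"',
]

if __name__ == "__main__":
    counts, share = googlebot_status_breakdown(SAMPLE_LOG)
    print(dict(counts), f"{share:.0%} of Googlebot hits were not 200")
```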

Question: After a migration: when you use the “Fetch” tool in GSC to submit primary pages, how deep does Google crawl from that submitted page? Do they only crawl all links on that submitted page? Or will they crawl even deeper?

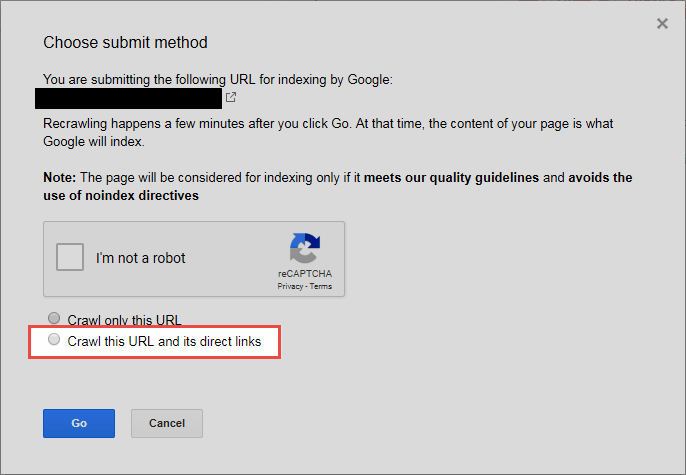

Brian: When you use Fetch as Google and select “recrawl this URL and its direct links,” you are essentially submitting the requested URL as well as all the pages that URL links to directly.

You can submit up to two site recrawl requests per day.

There is no guarantee, but eventually, Google will crawl deeper than the pages linked from the URL submitted.


Opinions expressed in this article are those of the guest author and not necessarily Search Engine Land.
