3 Steps To Find And Block Bad Bots

Is your Web analytics data being skewed by bot visits to your site? If so, columnist Ben Goodsell has the solution.

Most SEOs have heard about using log files to understand Googlebot behavior, but few seem to know they can also be used to identify bad bots crawling your site. More and more, these bots are executing JavaScript, inflating analytics, consuming server resources, and scraping and duplicating content.

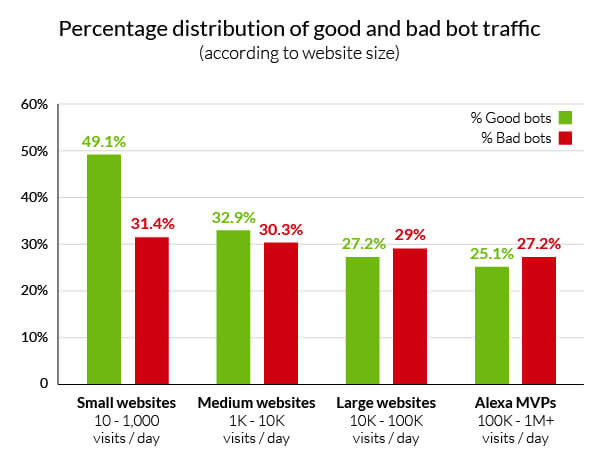

The Incapsula 2014 bot traffic report looked at 20,000 websites (of all sizes) over a 90-day period and found that bots account for 56% of all website traffic, with malicious bots making up 29% of all traffic. Additional insight showed that the more you build your brand, the larger a target you become.

While there are services out there that automate much more advanced techniques than what’s shown here, this article is meant to be an easy starting point (using Excel) to understand the basics of using Log Files, blocking bad bots at the server level and cleaning up Analytics reports.

1. Find Log Files

Web servers typically keep a log of every request made to the sites they host. Whether a customer is using the Firefox browser or Googlebot is looking for newly created pages, all activity is recorded in a simple file.

The location of these log files depends on the type of server or host you have. Here are some details on common platforms.

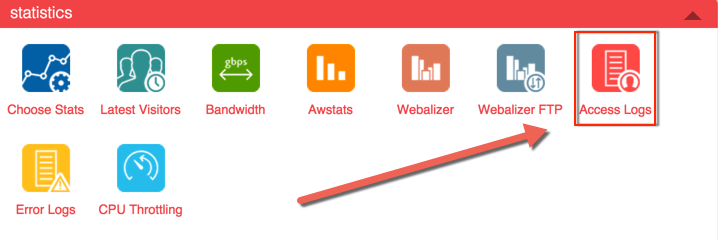

- cPanel: A common interface for Apache hosts (pictured) that makes finding log files as easy as clicking a link.

- Apache: Log files are typically found in /var/log and its subdirectories; running the locate access.log command will also quickly find server logs.

- IIS: On Microsoft servers, logging can be enabled and configured in the Internet Services Manager. Go to Control Panel -> Administrative Tools -> Internet Services Manager -> Select website -> Right-click then Properties -> Website tab -> Properties -> General Properties tab.

2. Identify Number Of Hits By IP & User Agents

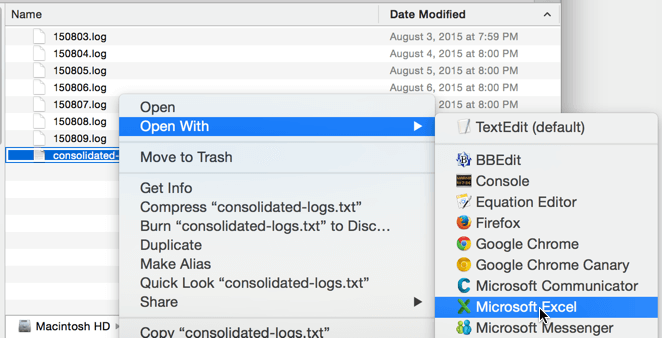

Once the files have been found, consolidate them, then open them in Excel (or your preferred tool). Due to the size of some log files, this is often easier said than done. For most small to medium sites, a computer with a lot of processing power should be sufficient.

Below, .log files were manually consolidated into a new .txt file using a plain text editor, then opened in Excel using text-to-columns and a “space” delimiter, with a little additional cleanup to get the column headers to line up.
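For those who would rather script the consolidation than paste files together by hand, here is a minimal Python sketch. It assumes the logs are plain-text files ending in .log that all sit in one folder; the function name `consolidate_logs` and its parameters are illustrative, not part of any tool mentioned in this article.

```python
from pathlib import Path


def consolidate_logs(log_dir: str, output_path: str, pattern: str = "*.log") -> int:
    """Concatenate every file in log_dir matching pattern into one text file.

    Returns the number of lines written. Assumes plain-text logs (hypothetical
    layout: all log files live directly inside log_dir).
    """
    out = Path(output_path)
    lines_written = 0
    with out.open("w", encoding="utf-8") as combined:
        # Sort by file name so the combined file has a predictable order.
        for log_file in sorted(Path(log_dir).glob(pattern)):
            # Never read the output file back into itself.
            if log_file.resolve() == out.resolve():
                continue
            with log_file.open("r", encoding="utf-8", errors="replace") as source:
                for line in source:
                    # Make sure the last line of each file ends with a newline
                    # so two requests never get glued together.
                    combined.write(line if line.endswith("\n") else line + "\n")
                    lines_written += 1
    return lines_written
```

Calling `consolidate_logs("logs", "combined.txt")` (both paths are placeholders) would produce a single file that can then be opened in Excel as described above.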

Find Number of Hits by IP

After consolidating and opening logs in Excel, it’s fairly easy to find the number of hits by IP.

To do this:

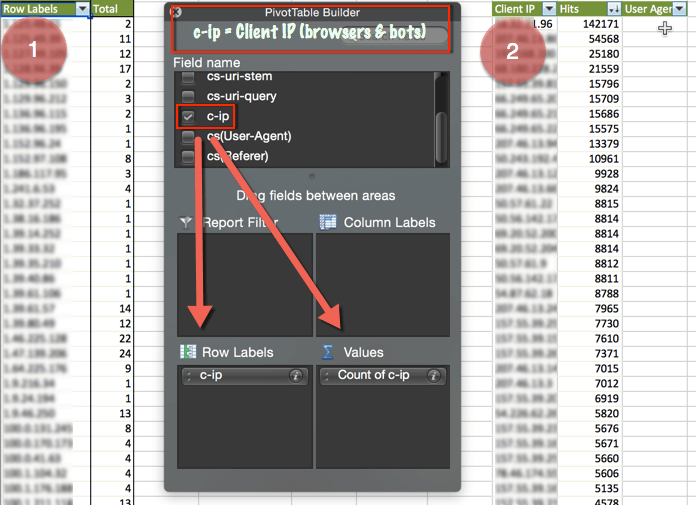

- Create a Pivot Table, look at Client IP and get counts.

- Copy and paste the results, rename the column headers to Client IP and Hits, sort by Hits in descending order, then insert a User Agent column to the right of Hits.
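When a log is too large for Excel, the same count can be approximated in a few lines of Python. This is only a sketch: it assumes the client IP is the first space-separated field on each line (as in Apache's common and combined log formats) and that lines starting with # are headers to skip. IIS logs usually put the client IP in a different column, so the field index would need adjusting there. The function name `hits_by_ip` is illustrative.

```python
from collections import Counter


def hits_by_ip(lines):
    """Return (ip, hits) pairs sorted from most to fewest hits.

    Assumption: the client IP is the first space-separated field of each line.
    """
    counts = Counter()
    for line in lines:
        line = line.strip()
        # Skip blank lines and header/comment lines.
        if not line or line.startswith("#"):
            continue
        client_ip = line.split(" ", 1)[0]
        counts[client_ip] += 1
    # most_common() sorts by hit count, descending, like the sorted pivot table.
    return counts.most_common()
```

Passing an open file works directly, for example `hits_by_ip(open("combined.txt", encoding="utf-8"))`, where combined.txt is a placeholder for your consolidated log.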

Find User Agents By IP

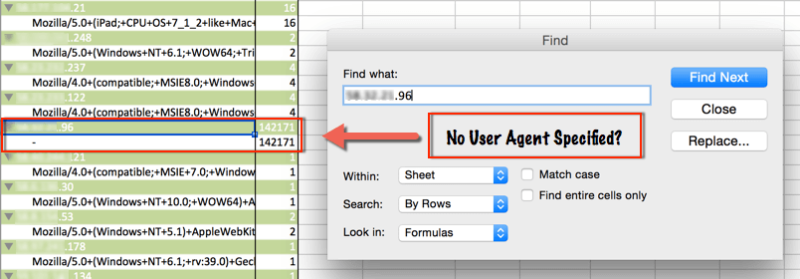

As a final step in identifying potential bad bots, find which user agents are associated with IPs hitting your site the most. To do this, go back to the pivot table and simply add the User Agent to the Row Label section of the Pivot Table.

Now, finding the User Agent associated with the top-hitting IP is as simple as a text search. In this case, the IP (which was from China) declared no User Agent and hit the site over 80,000 more times than any other IP.
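The pivot-table lookup above can also be sketched in Python. The example below assumes Apache's combined log format, where the User Agent is the last double-quoted field on each line and a missing agent is logged as "-"; other formats would need a different pattern. The names `LINE_RE`, `agents_by_ip` and `top_offender` are illustrative.

```python
import re
from collections import Counter, defaultdict

# Assumption: combined log format, IP first and User Agent as the last quoted field.
LINE_RE = re.compile(r'^(?P<ip>\S+) .* "(?P<agent>[^"]*)"\s*$')


def agents_by_ip(lines):
    """Map each client IP to a Counter of the User Agents it sent."""
    agents = defaultdict(Counter)
    for line in lines:
        match = LINE_RE.match(line.strip())
        if match:  # lines that do not fit the assumed format are ignored
            agents[match.group("ip")][match.group("agent")] += 1
    return agents


def top_offender(agents):
    """Return (ip, total_hits, sorted_user_agents) for the busiest IP, or None."""
    if not agents:
        return None
    ip, counter = max(agents.items(), key=lambda item: sum(item[1].values()))
    return ip, sum(counter.values()), sorted(counter)
```

An IP whose only User Agent is "-" and whose hit count dwarfs everyone else's is the kind of candidate described above.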

3. Block IPs From Accessing Site And Displaying In Analytics

Now that the malicious IP has been identified, use these instructions to prevent number inflation in Analytics, then block that IP from accessing the site completely.

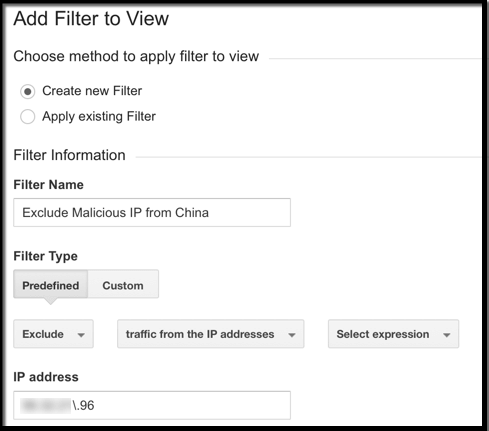

Blocking An IP In Analytics

Using Filters in Google Analytics, you can exclude IPs. Navigate to Admin -> Choose View (always a good idea to Create New View when making changes like this) -> Filters -> + New Filter -> Predefined -> Exclude traffic from the IP addresses -> Specify IP (regular expression).
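Because the filter field takes a regular expression, dots in an IP address need to be escaped, or they will match any character. The following Python sketch builds such a pattern from a list of IPs. The function name `ip_exclude_pattern` is illustrative, and the anchored `^(...)$` form is an assumption about how strict you want the match to be.

```python
import re


def ip_exclude_pattern(ips):
    """Build one anchored regular expression that matches exactly the given IPs."""
    # re.escape turns "." into "\." so it only matches a literal dot.
    escaped = [re.escape(ip) for ip in ips]
    # ^ and $ stop 203.0.113.5 from also matching 203.0.113.55.
    return "^(" + "|".join(escaped) + ")$"
```

For instance, `ip_exclude_pattern(["203.0.113.5"])` yields `^(203\.0\.113\.5)$`, which can be pasted into the filter's IP field. The address shown is a documentation-reserved placeholder, not a real offender.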

Tip: Google Analytics automatically blocks known crawlers identified by IAB (a $14,000 value for non-members). Just navigate to Admin -> View Settings, and under where it says “Bot Filtering,” check “Exclude all hits from known bots and spiders.” It’s always a best practice to create a new view before altering profile settings.

If you use Omniture, there are three methods to exclude data by IP.

- Exclude by IP. Excludes hits from up to 50 IPs.

- Vista Rule. For companies that need to exclude more than 50 IPs.

- Processing Rule. It’s possible to create a rule that prevents showing data from particular IPs.

Blocking An IP At The Server Level

Similar to identifying where the log files are located, the method of blocking IPs from accessing your site at the server level changes depending on the type of server you use.

- cPanel: Using the IP Address Deny Manager, IPs can be blocked and managed on an ongoing basis.

- Apache: mod_authz_host is the recommended module for this, but an .htaccess file can also be used.

- IIS: Open IIS Manager -> Features View -> IPv4 Address and Domain Restrictions -> Actions Pane -> Add Deny Entry.
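For the Apache option, the deny rules can be generated from the list of offending IPs rather than typed by hand. The Python sketch below assumes Apache 2.4, where mod_authz_host uses `Require not ip` inside a `<RequireAll>` block; Apache 2.2 uses the older Order/Allow/Deny syntax instead. The function name `apache_deny_block` is illustrative, and the output should be reviewed before it goes into a live configuration or .htaccess file.

```python
import ipaddress


def apache_deny_block(ips):
    """Return an Apache 2.4 <RequireAll> block that blocks the given IPs.

    Raises ValueError if any entry is not a valid IPv4 or IPv6 address.
    """
    lines = ["<RequireAll>", "    Require all granted"]
    for ip in ips:
        # Validate first so a typo never ends up in the server config.
        ipaddress.ip_address(ip)
        lines.append(f"    Require not ip {ip}")
    lines.append("</RequireAll>")
    return "\n".join(lines)
```

Printing `apache_deny_block(["203.0.113.5"])` gives a block that allows everyone except that one (placeholder) address.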

Conclusion

Third-party solutions route all traffic through a network to identify bots (good and bad) in real time. They don’t just look at IPs and User Agent strings, but also HTTP headers, navigational site behavior and many other factors. Some sites are using methods like reCAPTCHA to ensure their sites’ visitors are human.

What other methods have you heard of that can help protect against the “rise of the bad bots”?

Opinions expressed in this article are those of the guest author and not necessarily Search Engine Land.